Three Big Boards.

An Enthusiastic Lament

Despite wanting to stand out from the crowd, be acknowledged within a heavily branded market, or be respected by contemporaries as commanding the most meaty and majestic man cave assembled since a cave man’s fleshy fingers punched caught-ate-digest, the capricious community of PC Enthusiasts could be casually divided into two breeds: those with pounds to invest and a purpose to survive, and the rest, seeking the best bang for the buck, but with more bangs between bucks.

The net result? A dearth of debates, boatloads of benchmarks, a glut of gaming glory and gloom and, once the dust has settled amidst the battered blades and crippled bearings of a million spin-sodden case fans, neither a cent nor a penny to show for a car boot CRT.

I hasten to assert that what you’ve just read doesn’t constitute a sneaky session of Anglo/American pot poking, but rather a heartfelt attempt to graciously unify two fascinating species, each enriched through years of evolution and immeasurable experience with stories, facts, opinions and information of the utmost value and intrigue.

Take me for example. One clement Spring morning in 2013. Ah! Remember then? Back in the day? The 2010s? All that online business, apps and social media and stuff, Twitter, Facebook. It seemed so natural, so trendy to flaunt a phone with a screen the size of a plasma, a bit like the 80s. Ah! Remember then? Back in the day? Yuppies and Puffballs? Atari, Amstrad, Amiga, sharing games and music. It seemed so stylish, so rewarding to flash a phone the size of a breeze block and use it in a car. Ah! Cars, remember them? Those things with four wheels and an engine? Sorry, what year is this again?

Back on topic, the dawn I speak of was my Birthday and being lasered from the wafer of Enthusiasts with long-term survival instincts, I made what I hoped would be an investment that afforded me performance, stability, a hint of pride and a freedom from compulsions to tinker for several years, if not longer.

Barely a year and a half later and my mind, conscience, eyes and ears were beginning to waywardly wander websites in reluctant but relentless search of reviews and prices for key components I knew would be faster, more efficient and better value.

Having surveyed all I felt my precious ego could bear, an evening of nauseating self-reassurance commenced. Don’t worry, hold out, your decision was wise, judicious and well-informed. Cherish what you built. Allow it to grow and develop character. Avail yourself of its devotion to you, the creator. Work is paramount and downtime disastrous. Draw from its stability and be honoured to harness the prodigious power it continues to provide. Don’t macerate your masterpiece for the sake of a dozen dreary frames…don’t, even for a fleeting moment, be one of them.

The reason for this desperate divulgence stemmed from a need to cordially convey a comparison between three systems of a similar pedigree but hailing from different generations. Each motherboard, a conceptual creature with a server grade heritage, targeting players, primers and miners alike, with a fervent intent to establish the ultimate solution for business and pleasure.

System 1:

Motherboard: Intel D5400XS “Skulltrail”

CPUs: 2x QX9775 3.2GHz Socket 771 150W 45nm Quad Core “Yorkfield” XE, no Hyperthreading, 12MB cache.

Memory: 8GB 800MHz FB-DIMMs (Fully Buffered)

Video Card: Nvidia GeForce GTX 580

Hard Drives: WD Raptors (10,000rpm)

Heatsinks: 2x Noctua NH-U12P, 2x NF-P12 fans, 1 per heatsink.

System 2:

Motherboard: EVGA SR-2

CPUs: 2x Intel Xeon X5680 Socket 1366 3.33GHz Hex-Core 130W 32nm “Westmere” with Hyperthreading, 12MB cache.

Video Card: Nvidia GeForce GTX 580.

Memory: 6x 2GB Crucial ECC DIMMs 1333MHz.

Hard Drives: WD Raptor (10,000rpm).

Heatsinks: 2x Noctua NH-U12P, 2x NF-P12 fans, 1 per heatsink.

System 3:

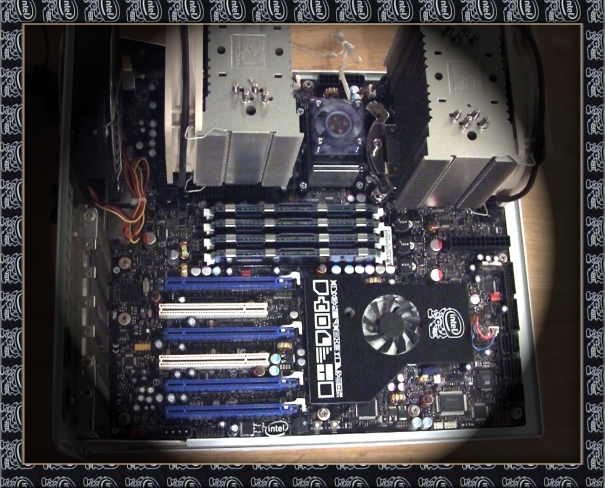

Motherboard: EVGA SR-X

CPUs: 2x Intel Xeon E5-2697 v2 Socket 2011 2.7GHz 22nm Twelve Core with Hyperthreading, 130W, 30MB cache.

Video Card: 3x Nvidia GeForce GTX Titan.

Memory: 8x 2GB Crucial ECC DIMMs 1333MHz

Hard Drives: 4x Samsung 256GB SSDs

Heatsinks: 2x Noctua NH-D14, 2x NF-P14 fans, 1 per heatsink.

So there they stand, our terrifying trio of devastating duality. A total of forty-four cores and eighty threads, enough processing power to match that of an entire iPhone 9 – the preceding claim shall be suitably edited to reflect the truth as defined by time and Tim Cook, subject to the author still harbouring the requisite health. The benchmark I chose to challenge this titanic threesome was Maxon’s redoubtable Cinebench rendering romp.
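For anyone inclined to check the head count, here is a quick tally sketched in Python. It counts both sockets on every board and takes the core counts and Hyperthreading support from the spec lists above; the dictionary layout and function name are purely my own illustration.

```python
# Core/thread tally for the three dual-socket systems listed above.
# The structure and names here are illustrative, not from any tool.
systems = {
    "Skulltrail (2x QX9775)": {"sockets": 2, "cores_per_cpu": 4, "hyperthreading": False},
    "SR-2 (2x X5680)": {"sockets": 2, "cores_per_cpu": 6, "hyperthreading": True},
    "SR-X (2x E5-2697 v2)": {"sockets": 2, "cores_per_cpu": 12, "hyperthreading": True},
}


def cores_and_threads(spec):
    """Return (physical cores, logical threads) for one system."""
    cores = spec["sockets"] * spec["cores_per_cpu"]
    # Hyperthreading presents two logical threads per physical core.
    threads = cores * (2 if spec["hyperthreading"] else 1)
    return cores, threads


total_cores = sum(cores_and_threads(s)[0] for s in systems.values())
total_threads = sum(cores_and_threads(s)[1] for s in systems.values())

for name, spec in systems.items():
    cores, threads = cores_and_threads(spec)
    print(f"{name}: {cores} cores, {threads} threads")
print(f"Total: {total_cores} cores, {total_threads} threads")
```

The SR-X alone accounts for 24 cores and 48 threads, which is the figure that matters for the Cinebench thread-detection quirk described further down.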

In keeping with long-standing tradition, conditions were a little off lab perfect and I pray my excuses merit some empathy. As do ships in the night, these systems passed through different eras of my existence. Some parts were my own, others on loan and the rest, sold to finance more pressing priorities.

Though the application version and test conducted were the same for all three configurations, the “Skulltrail’s” QX9775s were not capable of Hyper-Threading, while the Westmere and Ivy Bridge based Xeons occupying the SR-2 and SR-X supported the feature, with both boards’ BIOSes enabling it by default. Had I envisaged compiling this video sooner, I’d have disabled it to promote the most level playing field possible.

In addition, Cinebench R11.5 was acting its age on the SR-X build and appeared to identify only 32 threads of the 48 present. I re-ran the test entering the value manually but without remedy. The third time, I checked the Task Manager and verified that all physical cores and virtual threads were in fact being exploited; hence, the bug is evidently limited to the UI and the result can be treated as legitimate. Maxon recently released Cinebench R15, which resolves this anomaly. Anyway here it is…hope you enjoy it!

Skulltrail Vs. SR-2 Vs. SR-X

A “tick” = a die shrink and a “tock” = an architectural transition.

No prizes for guessing the result, but what would a benchmark be if not accompanied by ambiguous analytical claptrap? Three cores, three eras and three thunderous strikes from Intel the Divine’s die defining cuckoo clock.

Let’s see where our flagships belong. The quad core QX9775, released in 2008, was one of seven chips to bear the codename “Yorkfield”, and symbolized the summit of Intel’s 45nm “Penryn” design, usurping the 65nm “Kentsfields” from the previous “Core” generation to a resounding “tick”.

Casual enthusiasts rarely distinguished the processor from the QX9770 despite its exclusivity to the “Skulltrail” platform. In fact, both these processors and their Xeon counterpart, the X5482, shared identical default speeds, cache, FSB frequency, pin layouts and all else, save for several telling discrepancies.

The X5482, or “Harpertown”, was a standard Xeon equipped to function in pairs and bred solely for the server market. By contrast, the QX9770 was a desktop class processor aimed at the enthusiast and armed, as was tradition, with an unlocked multiplier.

Though the sockets for each processor appeared identical to the nonchalant eye, the 775, common to all desktop boards of the age, was limited to a TDP of 136 watts and could only operate as a single entity, whilst the 771 soldered to Xeon motherboards supplied a maximum of 150 watts and was keyed with notches several millimetres higher to avoid erroneous installation. The QX9775 aptly combined the Xeon’s socket, higher power envelope and ability to partner up with a prodigious quartet of unencumbered cores.

Intel’s attempt to assemble a package that placated every species of performance hunter came during an era when a hauntingly familiar force commanded as much attention for its motherboards as for its video cards. Nvidia, Old Green Eyes himself, had directly solicited Intel for a licence that granted chipsets forged within his own domain full compatibility with the core master’s finest creations.

Controversially, Intel readily accepted the proposal on the basis it would be mutual and afford him rights to exploit certain emerald trinkets in return. The deal amounted to each placing a loaded firearm into the palm of a potentially hostile rival and the resultant motherboards became the subject of debates that permeated forums filled with veteran desktop users faster than a forest fire.

The majority of feedback appeared positive until Netburst architecture shifted to “Core”. Then, rapidly, the notorious nForce chipset reaped fame for all the wrong reasons. Roasting north bridges, sluggish SATA controllers, vanishing RAID arrays and unstable overclocks, especially with “Yorkfields”.

A spate of additional licensing issues led to the Skulltrail’s historical recognition as the first non-Nvidia mobo of the age able to implement Crossfire and SLI which, in a showcase of impish irony, it accomplished by fostering a pair of Nvidia’s bridge chips. Sadly, the Seaburg chipset upon which the D5400XS was based also suffered serious impediments, the worst of which was its inherent dependence on expensive fully buffered RAM, more scarce and far less flexible than conventional DDR2 and hot enough to necessitate active cooling. Had Intel elected to address this crucial flaw with customary diligence, their design would have cultivated a new genre of motherboard.

If customer feedback dictated the outcome of this comparison, EVGA’s SR-2 would waltz over the line. To early adopters who’d anticipated the “Skulltrail” would spawn a sequel, and to others poised to poach the pleasures of an industrial grade toy for the tireless tweaker, it was the only feasible remedy. Despite having to manipulate vanilla Xeons in a state of lock-down, it used its barn-burning B clock, high performance DDR3 DIMMs and Intel’s modest “Turbo Boost” allowance to attain spectacular results. Like the D5400XS, it carried two Nvidia bridge chips – the later NF200 – which effectively doubled its reserve of PCI Express lanes and provided enough bandwidth to drive seven GPUs.

The X5680 used in this test hailed from a large stable of “Westmere-EP” chips, each six cores in credit and corporate cousins of the “extreme” branded “Gulftown” family. All were propelled by Intel’s 32nm “Westmere” die, the shrunken next of kin to the 45nm Nehalem, and thus from the master’s timepiece tolled a second sonorous “tick”.

Our final candidate, coveted and criticised with equal fervour, is EVGA’s largely forsaken SR-2 follow-up, the SR-X. The system this particular board nobly supported is the very one I used to type these words and earn my living, so forgive a tendency toward leniency. I consider it a flawed and abused genius, plagued by a plethora of unforeseeable setbacks.

Here is a brief chronology. After playing a fundamental role in the genesis and nurture of the SR-2, one of EVGA’s most precious and acclaimed acquisitions, Peter Tan, resigned his position and headed for Asus. The remaining engineers, themselves no slouches, valiantly attempted to apply what they had learned when developing the SR-X.

The C606 chipset that occupied the SR-X was a server pitched paraphrase of the X79 and most often cited amidst a catalogue of Dual Xeon solutions offered by Supermicro. Gigabyte’s atypical X79S-UP5 also made use of it and Asus’s Z9PE-D8 harboured the more popular C602, identical save for the absence of a SAS controller. By default, both chipsets were engineered to allocate an equal number of lanes from each processor to attend to their payload of PCI peripherals.

The SR-X was different, drawing every lane from the primary CPU and relying on a PEX8747 multiplexing chip to compensate for the diminished bandwidth. It wasn’t enough, and those eagerly populating their SR-Xs with lane devouring video cards and ravenous RAID controllers soon began to encounter costly side-effects.

Disabling useful integrated devices such as USB3 headers and Bluetooth became necessary to ensure the board could waddle its way into Windows. When accommodating “Sandy Bridge” Xeons this issue was mercifully rare and left the user with ports aplenty with which to proceed. Yet barely months had passed before more richly adorned graphics cards, such as the GTX Titan, caused the black POST screens to return tenfold and necessitated several BIOS updates to be able to function.

It is now I must insist haters be impartial, for this problem was a far reaching one and related to dwindling BIOS address space combined with record levels of RAM surrounding cutting edge GPUs. It wasn’t, isn’t, nor shall ever be specific to the SR-X and in fact, caused yours truly to return his Z9PE-D8 due to Asus’s appallingly inefficient support. The links below present unimpeachable proof, not only of when the issue first arose but of the time it took for each company to acknowledge, clarify and resolve it.

http://rog.asus.com/forum/showthread.php?27225-ASUS-Z9PE-D8-WS-Issues-detecting-Quadro-K5000-amp-GTX-6XX-series-GPUs-(Q-Code-62)

http://forums.evga.com/Computer-won39t-boot-with-titan-m1872398.aspx

What ultimately earned the SR-X such savage negative press stemmed from detriments shared by all comparable platforms and, if those divulged were not decisive, there was a double coup de grâce to follow. Despite their crippled multipliers, Westmere Xeons still afforded moderate scope for overclocking, and extensive guides to achieving optimum returns, many from proud SR-2 owners, have formed the roots of discussion threads longer than Noam Chomsky’s CV.

The technique involved some diligent adjustments to the base clock and QPI, along with an artful application of SpeedStep and Turbo Boost. However, when Intel readied its “Sandy Bridge” regiment to face the Sun’s anvil, some shrewd and consequential modifications were imposed.

Overclocking was now restricted to the CPU’s multiplier, and the base clock frequency was a common value ascribed to the PCI-E subsystem, USB and SATA buses in addition to the processor and memory. Why was this so significant? As we have gathered, a Xeon’s multiplier is a locked cause and those in the E5 bloodline were no exception. This meant any hope of extracting a healthy performance bonus depended entirely on the B clock which, because of the nature of its implementation, could only be adjusted in increments of 1 MHz and, if pushed more than a handful outside its default of 100, induced a bevy of blue screens.
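To put a number on how thin that bonus was, here is a minimal Python sketch of the arithmetic: core clock equals base clock times multiplier. It assumes a locked multiplier of 27 (inferred from the E5-2697 v2’s 2.7GHz rating at the 100 MHz default), ignores Turbo Boost, and treats 105 MHz as an illustrative stand-in for “a handful” above stock rather than a measured stability limit.

```python
# Core clock = base clock (BCLK) x CPU multiplier.
# Assumptions: multiplier of 27 inferred from 2.7 GHz / 100 MHz,
# Turbo Boost ignored, 105 MHz used only as an illustrative ceiling.
STOCK_BCLK_MHZ = 100
LOCKED_MULTIPLIER = 27


def core_clock_mhz(bclk_mhz, multiplier=LOCKED_MULTIPLIER):
    """Effective core frequency in MHz for a given base clock."""
    return bclk_mhz * multiplier


def gain_percent(bclk_mhz):
    """Percentage gain over stock when only the base clock is raised."""
    stock = core_clock_mhz(STOCK_BCLK_MHZ)
    return 100.0 * (core_clock_mhz(bclk_mhz) - stock) / stock


for bclk in range(100, 106):  # 1 MHz steps, as the platform allowed
    print(f"BCLK {bclk} MHz -> {core_clock_mhz(bclk)} MHz "
          f"(+{gain_percent(bclk):.1f}%)")
```

Because the multiplier cannot move, every extra percent of core clock costs a matching percent on the PCI-E, USB and SATA buses, which is why the ceiling arrived so quickly.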

So there we have it. If the above saga was enough to earn EVGA nothing but vicious contempt from the enthusiast, both Asus and even Intel must also be held to account. If there were any valid basis for isolated criticism, it would be to argue that the SR-X’s merits as a single CPU platform were overly considered and its PCI Express reserves need not have been so radically redeployed.

Oh, and EVGA were a bit slow in supplying a BIOS that enabled Ivy Bridge Xeons to “tick”. That’s it folks, three ticks, three mega boards and half a decade of wafer spinning. Turn yonder to see a breakdown of the system I trust will have yielded years of princely service by the time you peruse that which you do…………………………………………………………………………………………………………NOW!

The “Investment” in Order of No Significance.

• Motherboard: EVGA’s sumptuous yet infamous SR-X. An alternative was Asus’s Z9PE-D8, which all save a handful appeared to favour. Unfortunately, its PCI-E resources had been designated in a fashion which, whilst more effective in maximizing the bandwidth each processor could supply peripherals, made it impossible to exploit more than two video cards in a triple screen “surround” setup. I know, sounds crazy, but I had the opportunity to test first hand. There it is, a still from my original post and the experience of a second ill-fated user to substantiate.

The anomaly was manifest in all Dual Xeon hostels sheltering C6XX chipsets, and would have afflicted the SR-X had the latter not enlisted a PLX chip to extract all its PCI-E bandwidth from one processor, arguably the board’s most reviled accoutrement. Oh, the irony.

The Z9 was built for renderers and research, unless you were a gamer who garnered their pleasures from a 4K paradise and relegated additional displays to 2D duties. Actually, as I write, that’s what I am. My personal segue into “3 way surround” promoted nothing but pain. Its mere implementation was like “Take Your Pick”, but with screens instead of boxes.

Click apply, and instantly be plunged into darkness. So which shall grant light first, if any? Come on. I’ve configured all correctly. One display port per card, one screen per display port. Still darkness. Is anybody home? Wait! We have light on the left…now the right…no, now the centre and all is dark once again. Ah! At last all three are ablaze but their order awry, hold on 132, 312, 231, 321…..123. You get the picture…or rather you don’t.

• Video Card(s) – Three of Nvidia’s finest almost full fat Keplerians. Could well have been this investment’s most ill-judged aspect. Having entered its phase of cynically enticing enthusiasts with prohibitively priced pixel mashers whose exclusivities transcended Elysium’s extremes, Nvidia was, with its GTX Titan, incurring ire and awe in equal measure. To cut a long pipeline short, it was the RAM quota, unprecedented at the time, that swayed me in an era of evolving eye-candy and radiating resolutions.

The chorus of CUDA cores in their “Kepler” cathedral derived from a design of industrial excellence and retained all the crucial characteristics of an ancestry to match. Engineering of astounding quality and finesse, the quiet cooling, the robust physique were all essential in executing the miraculous mixture of potency and stability I solicited.

I wanted these things to last, to spin up and sparkle, to deliver frames by the freight load, leave rendering ravaged, tear texture packs asunder and gallop through games. I yearned for years of dedicated and dependable graphical splendour. I aspired to install and ignore an infallible service. No care, no concern, shove them in, let them shine.

Deep breath. I bought three. Hindsight? Come on doubters, prise those palms from your faces.

Ah! You should have waited for the R9 290X, under half the price, faster, better at 4k. Let me stop you. Hotter, less memory – not hugely significant I’ll concede – three of them, louder, costly watts, higher heat, worthless worries and a wait of over 7 months. Not feasible back then. Saved a stash in the sale of a pair of 680s.

The Titan Trio has yet to fluff a frame. As for the alternatives. 780? Half the memory. 780 Ti? See above. R9 295X2? Too long a delay and my central heating. Titan-Z? Call me a sucker, not a sinner. Besides, if I’d held out till then, my three vanilla Titans would’ve undercut a sole Titan-Z. Right, enough now, getting silly.

• CPUs: A pair of core riddled Xeon E5-2697 v2s – I’m no over-clocker, just audio, video, prime and a spot of gaming, but sometimes all at once!

• Coolers – Please play me Chopin’s two Nocturnes opus NH-D14 in air minor. Well, only one candidate could possibly cater for so wilful a waiver of Water World and, over-clocking or otherwise, with a double dollop of twelve cores, to economize would eschew logic. Phanteks has since composed its own paraphrase, said to be just as impressive.

• Hard Drives – 4x Samsung MZ-5PA256 (256GB SATA II) – You couldn’t give those away now, but in 18 months of billowing bytes, not a bit breached the boundaries of its cell.

• Memory – 16GB Crucial 1333MHz ECC RAM – Again stability over speed. Besides, never considered memory a major catalyst for speed, and got sick of “extreme” RAM during the DDR2 era, when sticks would fail more frequently than a mass murderer’s moral compass.

Mini Review Alert!

• Case – Lian Li PC-P80N – Believe it or not, the SR-X barely squeezed in. The PC-P80N was the revised edition of the abhorred and adored PC-P80. The only differences were front USB 3.0 ports (the original’s were 2.0), a reallocation of PSU real-estate (from rear-top to rear-bottom), a pair of practically appointed front doors and credibly redesigned cages conducive to tasteful, tidy hard drive tinkering.

After all, who hasn’t tethered a twelve inch Titan prior to sparing their SATAS the space to slide in? Not I, but this time, no concern. Flip open a door, loosen a screw, lift a clip and slip the drive in from the front. Lian-Li’s efficient mounting system – the traditional quartet of thumb twisters and rubber rings – makes for a definitive solution. Oh, and the final discrepancy, a brace of new fans sans their blue LEDs, but all 3 pin and motherboard ready.

Overall, it bordered on the flawless. 11 expansion slots, every mould of motherboard heartily hosted, 15 inch video cards warmly…check…coolly welcomed whatever the hour. Radiators and RAID arrays more than reasonably provided for. Yet, last time I nipped to NewEgg (on the net), I discovered this delightful case to be deemed defunct. Why? Not as worthy as the 2120B or 2120X, both of which remained available?

Margins too mean? A dying demand? All of the above? I sensed a part of my era had ebbed away but felt content to have clicked when I did.

• PSU – Enermax Platimax 1500W – Reams of rails to serve many or one rail to rule all? Yet another contentious issue as yet unresolved. I searched, I read, I pondered, I picked.

EVGA Supernova? Over branded, reviews mixed. Corsair AX1200i? Single rail but three GPUs, two CPUs, 24 cores, 4 SSDs, 1 HD AND a Blu-Ray…1200 watts and a Platinum placard…should just swing it. No, too risky. How about Antec? No 1500 watt model.

The Silverstone Strider 1500? Looks good, but an octet of rails and only 25 amps on each. Don’t fret, pipe the forums, just use the rails constructively. One rail per pair of PCI-E connectors: 25 amps = 300 watts from two 8-pins, plus 75W from the slot = 375 watts per card – this was before the R9 295X2 of course!

Sure, says I, but what about those 12 core CPUs? 130W each. Uh huh, but neither overclocked AND an 8 pin primary plus a 6 pin auxiliary socket on your motherboard for each?! Go for it! Hmmm. No, to release my restless mind from these rails, a five amp enhancement for each is essential…enter the Enermax alliteration…adios!
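For the rail-wary, the forum arithmetic can be sketched in a few lines of Python. It assumes every rail runs at a nominal 12 volts and that each card draws from one dedicated rail plus the 75W slot allowance quoted above; the 30 amp figure is simply the 25 amp rail plus the five amp enhancement I was after, not a number lifted from any spec sheet.

```python
# Per-card power budget on a multi-rail PSU.
# Assumptions: nominal 12 V rails, one rail per card (feeding its pair
# of 8-pin connectors), plus the 75 W available from the PCI-E slot.
RAIL_VOLTAGE = 12.0
SLOT_WATTS = 75.0


def rail_watts(amps, volts=RAIL_VOLTAGE):
    """Power a single rail can deliver: watts = amps x volts."""
    return amps * volts


def card_budget_watts(rail_amps):
    """Ceiling for one video card: dedicated rail plus slot power."""
    return rail_watts(rail_amps) + SLOT_WATTS


for amps in (25, 30):  # 25 A rails versus rails with five amps more
    print(f"{amps} A rail: {rail_watts(amps):.0f} W from the rail, "
          f"{card_budget_watts(amps):.0f} W per card including the slot")
```

The extra five amps buys 60 watts of headroom per rail, which is peace of mind rather than necessity, but peace of mind was the whole point.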

GPUs stutter,

Hard drives splutter,

Frame rates are no longer butter,

But a well chosen case is your friend for life.

As much as I am a nutter…