Three Big Boards.

A “tick” is a die shrink and a “tock” an architectural transition.

No prizes for guessing the result, but what would a benchmark be if not accompanied by ambiguous analytical claptrap? Three cores, three eras and three thunderous strikes from Intel the Divine’s die-defining cuckoo clock.

Let’s see where our flagships belong. The quad-core QX9775, released in 2008, was one of seven chips to bear the codename “Yorkfield”, and symbolized the summit of Intel’s 45nm “Penryn” design, usurping the 65nm “Kentsfields” from the previous “Core” generation to a resounding “tick”.

Casual enthusiasts rarely distinguished the processor from the QX9770, despite its exclusivity to the “Skulltrail” platform. In fact, both these processors and their Xeon counterpart, the X5482, shared identical default speeds, cache, FSB frequency, pin layouts and all else, save for several telling discrepancies.

The X5482, or “Harpertown”, was a standard Xeon equipped to function in pairs and bred solely for the server market. By contrast, the QX9770 was a desktop-class processor aimed at the enthusiast and armed, as was tradition, with an unlocked multiplier.

Though the sockets for each processor appeared identical to the nonchalant eye, the 775, common to all desktop boards of the age, was limited to a TDP of 136 watts and could only operate as a single entity, whilst the 771 soldered to Xeon motherboards supplied a maximum of 150 watts and was keyed with notches several millimetres higher to avoid erroneous installation. The QX9775 aptly combined the Xeon’s socket, higher power envelope and ability to partner up with a prodigious quartet of unencumbered cores.

Intel’s attempt to assemble a package that placated every species of performance hunter came during an era when a hauntingly familiar force commanded as much attention for its motherboards as for its video cards. Nvidia, Old Green Eyes himself, had directly solicited Intel for a licence that granted chipsets forged within his own domain full compatibility with the core master’s finest creations.

Controversially, Intel readily accepted the proposal on the basis that it would be mutual and afford him rights to exploit certain emerald trinkets in return. The deal amounted to each placing a loaded firearm into the palm of a potentially hostile rival, and the resultant motherboards became the subject of debates that spread through forums filled with veteran desktop users faster than a forest fire.

The majority of feedback appeared positive until the Netburst architecture gave way to “Core”. Then, rapidly, the notorious nForce chipset reaped fame for all the wrong reasons: roasting north bridges, sluggish SATA controllers, vanishing RAID arrays and unstable overclocks, especially with “Yorkfields”.

A spate of additional licensing issues led to the Skulltrail’s historical recognition as the first non-Nvidia mobo of the age able to implement both Crossfire and SLI which, in a showcase of impish irony, it accomplished by fostering a pair of Nvidia’s bridge chips. Sadly, the Seaburg chipset upon which the D5400XS was based also suffered serious impediments. The worst was its inherent dependence on expensive fully buffered RAM, scarcer and far less flexible than conventional DDR2 and hot enough to necessitate active cooling. Had Intel elected to address this crucial flaw with customary diligence, their design would have cultivated a new genre of motherboard.

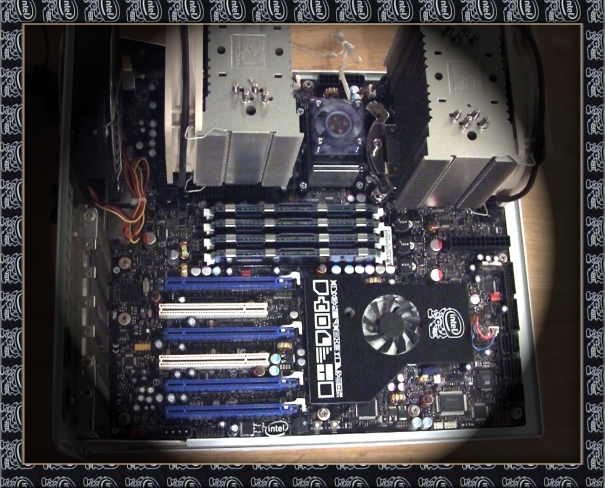

If customer feedback dictated the outcome of this comparison, EVGA’s SR-2 would waltz over the line. To early adopters who’d anticipated the “Skulltrail” would spawn a sequel, and to others poised to poach the pleasures of an industrial-grade toy for the tireless tweaker, it was the only feasible remedy. Despite having to manipulate vanilla Xeons in a state of lock-down, it used its barn-burning base clock, high-performance DDR3 DIMMs and Intel’s modest “Turbo Boost” allowance to attain spectacular results. As on the D5400XS, two Nvidia bridge chips, here the later NF200, effectively doubled its reserve of PCI Express lanes and provided enough bandwidth to drive seven GPUs.

The X5680 used in this test hailed from a large stable of “Westmere-EP” chips, each six cores in credit and corporate cousins of the “extreme”-branded “Gulftown” family. All were propelled by Intel’s 32nm “Westmere” die, the shrunken next of kin to the 45nm “Nehalem”, and thus from the master’s timepiece tolled a second sonorous “tick”.

Our final candidate, coveted and criticised with equal fervour, is EVGA’s largely forsaken SR-2 follow-up, the SR-X. The system this particular board nobly supported is the very one I used to type these words and earn my living, so forgive a tendency toward leniency. I consider it a flawed and abused genius, plagued by a plethora of unforeseeable setbacks.

Here is a brief chronology. After playing a fundamental role in the genesis and nurture of the SR-2, one of EVGA’s most precious and acclaimed acquisitions, Peter Tan, resigned his position and headed for Asus. The remaining engineers, themselves no slouches, valiantly attempted to apply what they had learned when developing the SR-X.

The C606 chipset that occupied the SR-X was a server-pitched paraphrase of the X79, most often cited amidst a catalogue of dual-Xeon solutions offered by Supermicro. Gigabyte’s atypical X79S-UP5 also made use of it, and Asus’s Z9PE-D8 harboured the more popular C602, identical save for the absence of a SAS controller. By default, both chipsets were engineered to allocate an equal number of lanes from each processor to attend to their payload of PCI peripherals.

The SR-X was different, drawing every lane from the primary CPU and relying on a PEX8747 multiplexing chip to compensate for the diminished bandwidth. It wasn’t enough, and those eagerly populating their SR-Xs with lane-devouring video cards and ravenous RAID controllers soon began to encounter costly side-effects.

Disabling useful integrated devices such as USB3 headers and Bluetooth became necessary to ensure the board could waddle its way into Windows. When accommodating “Sandy Bridge” Xeons this issue was mercifully rare and left the user with ports aplenty with which to proceed. Barely months had passed, though, before more richly adorned graphics cards such as the GTX Titan caused the black POST screens to return tenfold, and several BIOS updates were needed before such cards could function.

It is now I must insist haters be impartial, for this problem was a far-reaching one, related to dwindling BIOS address space combined with the record levels of RAM surrounding cutting-edge GPUs. It wasn’t, isn’t, nor shall ever be specific to the SR-X and, in fact, caused yours truly to return his Z9PE-D8 due to Asus’s appallingly inefficient support. The links below present unimpeachable proof, not only of when the issue first arose but of the time it took for each company to acknowledge, clarify and resolve it.

http://rog.asus.com/forum/showthread.php?27225-ASUS-Z9PE-D8-WS-Issues-detecting-Quadro-K5000-amp-GTX-6XX-series-GPUs-(Q-Code-62)

http://forums.evga.com/Computer-won39t-boot-with-titan-m1872398.aspx

What ultimately earned the SR-X such savage negative press stemmed from detriments shared by all comparable platforms, and if those divulged were not decisive, there was a double coup de grâce to follow. Despite their crippled multipliers, Westmere Xeons still afforded moderate scope for overclocking, and extensive guides to achieving optimum returns, many from proud SR-2 owners, have formed the roots of discussion threads longer than Noam Chomsky’s CV.

The technique involved some diligent adjustments to the base clock and QPI, along with an artful application of SpeedStep and Turbo Boost. However, when Intel readied its “Sandy Bridge” regiment to face the Sun’s anvil, some shrewd and consequential modifications were imposed.

Overclocking was now restricted to the CPU’s multiplier, and the base clock frequency was a common value ascribed to the PCI-E subsystem, USB and SATA buses in addition to the processor and memory. Why was this so significant? As we have gathered, a Xeon’s multiplier is a locked cause and those in the E5 bloodline were no exception. This meant any hope of extracting a healthy performance bonus depended entirely on the base clock which, because of the nature of its implementation, could only be adjusted in increments of 1 MHz and, if pushed more than a handful outside its default of 100, induced a bevy of blue screens.
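For those who like to see the sums, here is a rough sketch of why a locked multiplier leaves so little on the table. It assumes only the usual relationship of core clock = base clock × multiplier. The multiplier of 31 is a made-up value for illustration rather than a figure taken from any chip in this test, and the function names are my own.

```python
def core_clock_mhz(bclk_mhz: float, multiplier: int) -> float:
    """Core frequency is simply the base clock times the CPU multiplier."""
    return bclk_mhz * multiplier


def bclk_gain_percent(bclk_mhz: float, stock_bclk_mhz: float = 100.0) -> float:
    """Percentage gain over the stock base clock.

    Because the base clock is shared, the same percentage is also
    imposed on every other bus derived from it.
    """
    return (bclk_mhz - stock_bclk_mhz) / stock_bclk_mhz * 100.0


# Hypothetical locked multiplier, chosen purely for illustration.
LOCKED_MULTIPLIER = 31

# Step the base clock upward in 1 MHz increments from its default of 100.
for bclk in range(100, 106):
    core = core_clock_mhz(bclk, LOCKED_MULTIPLIER)
    gain = bclk_gain_percent(bclk)
    print(f"Base clock {bclk} MHz -> core {core:.0f} MHz (+{gain:.1f}%)")
```

With the multiplier fixed, each extra MHz on the base clock is worth only one percent, and that same percent is forced upon the PCI-E, USB and SATA buses whether they like it or not.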

So there we have it. If the above saga was enough to earn EVGA nothing but vicious contempt from the enthusiast, both Asus and even Intel must also be held to account. If there were any valid basis for isolated criticism, it would be to argue that the SR-X’s merits as a single-CPU platform were overly considered and its PCI Express reserves need not have been so radically redeployed.

Oh, and EVGA were a bit slow in supplying a BIOS that enabled Ivy Bridge Xeons to “tick”. That’s it folks: three ticks, three mega boards and half a decade of wafer spinning. Turn yonder to see a breakdown of the system I trust will have yielded years of princely service by the time you peruse that which you do…………………………………………………………………………………………………………NOW!