The “Kepler” Rides Out – The Benchmarks.

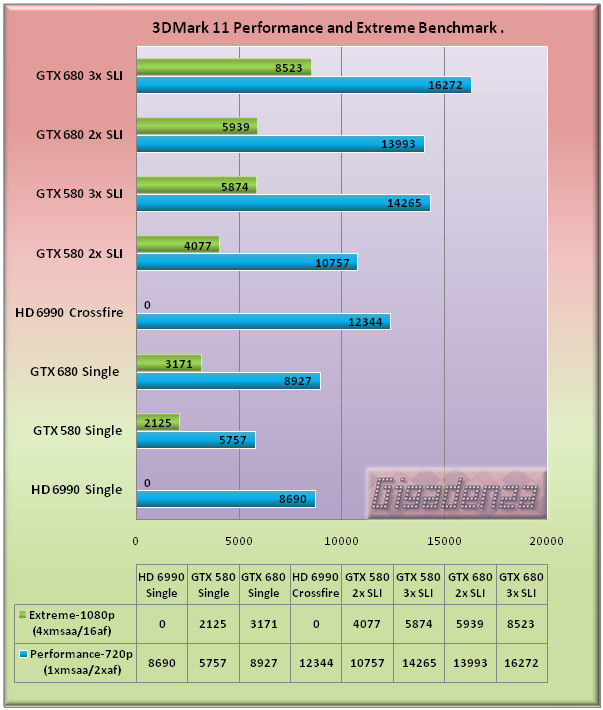

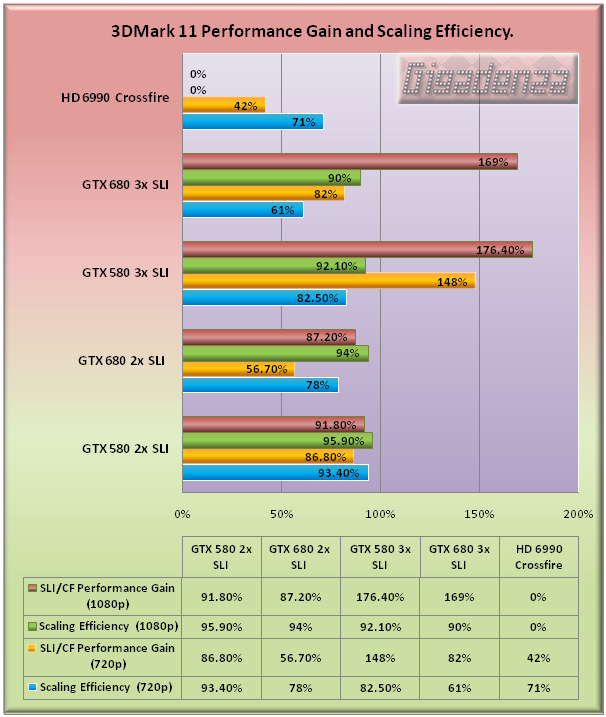

Test 1 – 3DMark 11

Since 3DMark Vantage is beginning to struggle to fully exploit the processing power offered by the latest multi-GPU setups, 3DMark 11 will, this time around, be included in its place.

Unfortunately, there was no chance to run the “Extreme” benchmark on the HD 6990, but the results still make it apparent that Nvidia’s quest for energy efficiency has in no way led to a compromise in performance. Just look at that! Even a single GTX 680 is enough to nose ahead of AMD’s dual-GPU monster, which itself consumes over 150 watts more under load.

With the scores being so close, we must consider that AMD’s most recent drivers might well have produced a different result, and that the absence of the HD 7970 from these tests makes Nvidia’s first 28nm card appear especially economical on power. Nevertheless, this is a very inspiring start for the GTX 680, whose score in 2-way SLI is virtually equal to that of three 580s in the “Performance” benchmark and fractionally higher than theirs in the “Extreme” test.

I wonder how long it will be before 3DMark 11 can no longer prevent video cards from forming a disorderly queue behind the CPU. There are certainly early warning signs in the “Performance” test, with two 680s yielding only a 56.7% gain compared with the 86.8% increase afforded by adding a second 580. This conservative bonus is reflected in the 680’s scaling efficiency, which is lower than the 580’s in both 2-way and 3-way SLI, despite significantly higher scores in all the tests.

However, as soon as we switch to the “Extreme” benchmark, the 680s are assigned a workload worthy of their capacity and exhibit the same breathtaking performance boosts and scaling as the 580s.

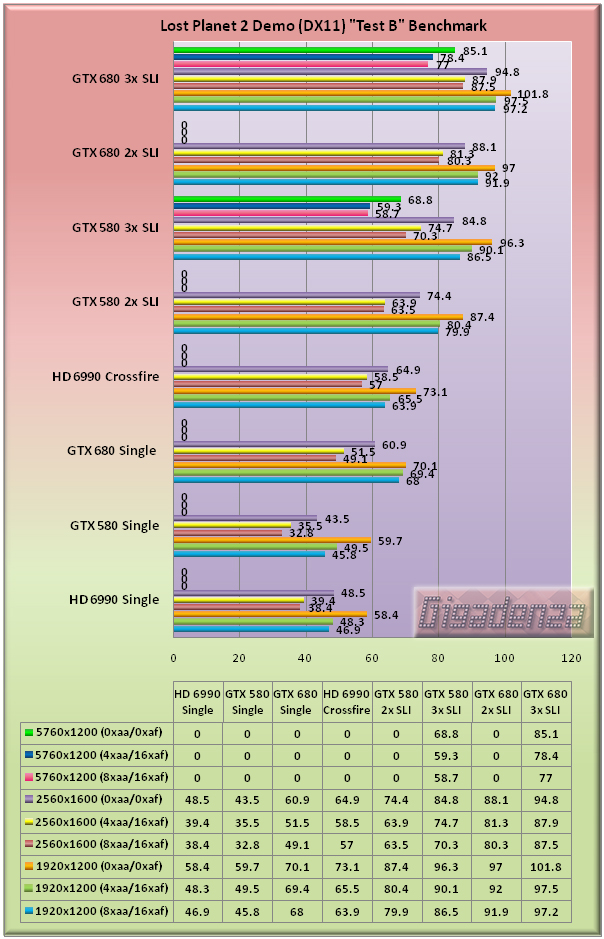

Test 2 – Lost Planet 2

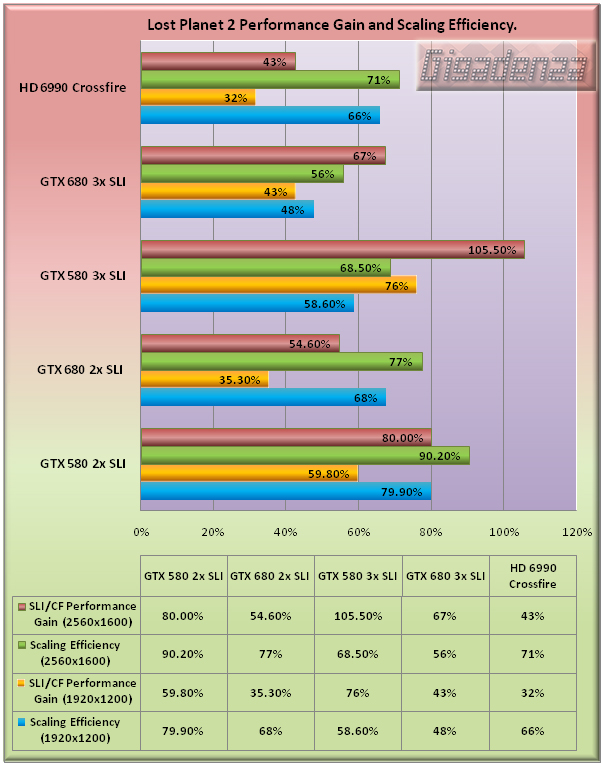

Lost Planet 2 provides two graphically arduous tests enriched with numerous DirectX 11-specific routines, including advanced shading techniques, highly detailed texturing and abundant tessellation. Here we focus exclusively on test B, the one with the massive, crawly thing!

Quite a lot of information to digest! The multi-screen tests at 5760×1200 would have been possible on a single HD 6990 or 680, though the 580 requires a minimum of two cards. As there was no opportunity to obtain results from the 6990 at this resolution, only the 580 and 680 were benchmarked and, to keep things consistent, each only in 3-way SLI.

Once again, a single GTX 680 has the legs on a 6990 and this time by a comprehensive margin, regardless of the resolution or quality enhancements. It also improves greatly on the 580’s performance, with a notably increasing lead as we move from 1920×1200 to 2560×1600 and/or apply eye-candy. Also note the consistent edge that two 680s have over three 580s.

One less card, considerably less electricity, yet superior performance. Mission accomplished, Nvidia.

Oh dear me. “THIS IS TOO EASY! GIVE US SOMETHING HARDER!” yelled the frustrated little video cards as the old CPU huffed and puffed. There can be no doubt that in this case, a resolution of 1920×1200 was simply not enough to adequately exploit the power of two 680s in SLI: only 35% more speed, with a scaling efficiency of 68%. By contrast, look at how much harder a pair of 580s were working at the same resolution, around 12 percentage points more per card, resulting in a much healthier performance increase of 59.8%.

Moving up to 2560×1600 gives us a stronger reason for investing: a speed gain of over 50%, with each card flexing 77% of its muscles.
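For anyone wanting to check the arithmetic, the gain and efficiency figures quoted for Lost Planet 2 seem to follow a simple relationship, sketched here in Python. This assumes scaling efficiency means the multi-card result divided by the card count times the single-card result, which is inferred from the numbers rather than stated anywhere; the function names are purely illustrative.

```python
def scaling_efficiency(gain_percent, cards):
    """Per-card efficiency (%) implied by a total speed gain over one card.

    Assumption: efficiency = multi-card speed / (cards * single-card speed).
    """
    speedup = 1 + gain_percent / 100
    return 100 * speedup / cards


def gain_from_efficiency(efficiency_percent, cards):
    """Inverse of the above: total speed gain (%) over a single card."""
    return efficiency_percent * cards - 100


# Two 680s at 1920x1200: 35% faster than one card
print(f"{scaling_efficiency(35, 2):.1f}")    # 67.5, quoted as 68%
# Two 580s at 1920x1200: 59.8% faster than one card
print(f"{scaling_efficiency(59.8, 2):.1f}")  # 79.9, about 12 points higher
# Two 680s at 2560x1600: 77% efficiency per card
print(gain_from_efficiency(77, 2))           # 54, i.e. "over 50%"
```

Under that assumption the quoted figures are consistent with one another, which suggests this is roughly how the efficiency numbers were derived.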

With three 680s, even at 2560×1600, you only get around 13% more speed than with two, which, for an additional £400, is surely impossible to justify. Had the test system featured an insanely overclocked Sandy Bridge-E CPU such as the 3960X instead of the stock 980X I had available, the benefit of SLI would undoubtedly have been more significant. However, I still question whether three cards offer a worthy advantage to anyone other than those looking to unite them with a multi-screen setup.
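To put a rough number on that, here is a small Python sketch of the cost per percentage point of extra speed at 2560×1600. The 13% and £400 figures come from the text above; pricing the second card at the same £400 and taking its gain as a flat 50% (the text only says "over 50%") are assumptions made for illustration, and `cost_per_point` is a made-up helper name.

```python
def cost_per_point(card_price, extra_gain_percent):
    """Pounds paid per percentage point of additional speed."""
    return card_price / extra_gain_percent


# Second 680: assumed to cost 400 pounds, gain taken as a flat 50%
second_card = cost_per_point(400, 50)
# Third 680: an additional 400 pounds for around 13% more than two cards
third_card = cost_per_point(400, 13)

print(f"second card: {second_card:.2f} per point")  # 8.00
print(f"third card:  {third_card:.2f} per point")   # 30.77
```

On those approximate figures, the third card costs nearly four times as much per point of extra speed as the second did.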