Well, the forecasts may have lacked authority, but this time they were wholly accurate. Nvidia’s apparent reluctance to disclose any firm details regarding its latest hand caused one notable hardware review site to break the “unofficial” NDA and post its extensive article half a day early. Frustration aside, one must assume that their decision to jump the gun hinged on the difficulty Nvidia might have in accusing them of violating an NDA which never officially existed, despite allegedly “countless emails” sent in an effort to establish one.

Perhaps one positive to emerge from all this anger and confusion is that, as with previous bona fide hardware launches from AMD and Nvidia, the goods themselves once again arrived on time, so to speak, and in plentiful supply. The age of tedious “paper” launches, when the testing and review phases would take place weeks, sometimes months, before frustrated customers were able to taste the fruit, has passed and shows no sign of a renaissance.

With the multitude of review and benchmarking sites now erupting across the internet, many created and nurtured by hopelessly addicted enthusiasts for nothing but a love of PC technology, we are as close to a level playing field as ever.

The big hitters may still get an early and enticing glimpse of the “next episode” but they can only break silence a day or two before less illustrious, yet equally worthy contemporaries. Thus, nearly all potential customers can base their decisions on the broadest possible range of opinions from the very beginning and with less risk of biased previews or inaccurate test results influencing them.

With all of the above in mind, let’s take a look at what materialised in a large brown box on the morning after the night before.

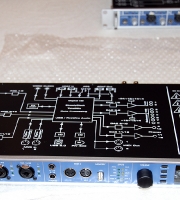

As with the “Fermi” based 5xx series, Nvidia’s priority with the “Kepler”, which is, broadly speaking, a fully refined Fermi, is energy efficiency. In examining the diagrams and table below, we can observe the more significant alterations the company has made to what many at first claimed was a terminally flawed design.

Both the Fermi and Kepler are based around four parallel GPCs (graphics processing clusters), and each of these clusters contains its own streaming multiprocessors (SMs). In the case of the Fermi, there were four SMs per cluster, making a total of sixteen (four clusters times four SMs), while the Kepler has just two per cluster, making a total of eight.

However, each of these streaming multiprocessors in turn contains its own CPCs (CUDA processing cores). The Fermi’s SMs each had 32 CPCs, giving us a total of 512 (remember, 4 GPCs times 4 SMs times 32 CPCs = 512). For the Kepler, a chip which sports over 3.5 billion transistors, Nvidia has introduced a new generation of streaming multiprocessor (known as SMX, or Streaming Multiprocessor Xtreme) in which the number of CPCs for each has been increased sixfold to 192! This gives an incredible total of 1536 CUDA processing cores (4 GPCs times 2 SMXs times 192 CPCs) crammed into half the number of streaming multiprocessors. Perhaps now we can begin to understand just how Nvidia has managed the feat of an enthusiast grade video card which dips below the 200W TDP barrier.
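The core-count arithmetic above can be checked with a few lines of Python. This is only an illustrative sketch: the `GpuLayout` class and its field names are invented for this example, and the figures are simply the ones quoted in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuLayout:
    """Hypothetical helper holding the per-chip figures quoted in the article."""
    name: str
    gpcs: int          # graphics processing clusters
    sms_per_gpc: int   # streaming multiprocessors per cluster
    cores_per_sm: int  # CUDA cores per streaming multiprocessor

    @property
    def total_sms(self) -> int:
        return self.gpcs * self.sms_per_gpc

    @property
    def total_cores(self) -> int:
        return self.total_sms * self.cores_per_sm


fermi = GpuLayout("Fermi", gpcs=4, sms_per_gpc=4, cores_per_sm=32)
kepler = GpuLayout("Kepler", gpcs=4, sms_per_gpc=2, cores_per_sm=192)

for chip in (fermi, kepler):
    print(f"{chip.name}: {chip.total_sms} SMs, {chip.total_cores} cores")
```

Running it confirms that the Kepler layout triples the core count while halving the number of multiprocessors.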

One area in which Nvidia’s single GPU cards have been lagging behind ATI (AMD) for a while is in their ability to drive multiple monitors. Even a two-and-a-half-year-old HD 5870 is enough to give the user out of the box support for up to three individual screens (provided that two have DisplayPort inputs) as well as multi-screen gaming action via ATI’s Eyefinity system.

A GTX 580, by contrast, despite emerging some months later, is limited to just two screens per card, affording the user no opportunity to exploit Nvidia’s rival multi-screen implementation, “3D Surround”, until a second card is added.

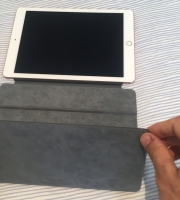

Those who have remained loyal to AMD for this reason can at long last reconsider their allegiances, since a single GTX 680 is able to drive a total of four monitors and fully supports “3D Surround”. Moreover, users fortunate enough to own four screens can designate three as their gaming real estate, while the fourth can be simultaneously assigned to display any desktop applications including web pages, documents and photos, HD movies, online TV and entertainment services and social media meanderings!

Though it might be difficult to argue that AMD doesn’t continue to set the standard, given that their 7970 can accommodate a horde of six displays, two MST hubs are needed to achieve this, and without these the limit is also four.

In addition, as with the 7970, Nvidia users no longer need their monitors to share identical resolutions, refresh rates or physical connectors, unless, that is, they are looking to game in 3D. Hence, it is a thoughtful and welcome addition and will likely tempt a clutch of floating voters that might otherwise have opted for the red team, at least once they have also seen the performance figures!

Sticklers for eye candy will be intrigued by two more of the 680’s features. The first is a new incarnation of “temporal” anti-aliasing called “TXAA”. It is said to combine the speed of FXAA (fast approximate anti-aliasing) with the visual quality of multi sample anti-aliasing (MSAA) by employing similar techniques to those observed “in CG films”, so says Nvidia.

There are two implementations of the algorithm, TXAA1 and TXAA2. TXAA1 is claimed to match the quality of 8x MSAA but deliver it at the speed of 2x MSAA, while TXAA2 offers superior visuals to 8x MSAA but is able to process them as fast as 4x MSAA.

The second feature is a novel form of V-Sync known as adaptive V-Sync. This dynamically engages v-sync as soon as an application’s frame rate exceeds the monitor’s refresh rate, whilst at all other times the function remains disabled.

A game running at an average of around 60FPS on a monitor with a refresh rate of 60Hz will no longer be instantly limited to 30FPS/30Hz (the next whole fraction down from 60) as soon as it dips below that average. Moreover, the familiar shearing and tearing of images that would, in this scenario, be vividly evident as soon as the frame rate went above 60FPS is now nullified by virtue of v-sync’s intervention at that precise point. In short, no stuttering (formerly a v-sync related issue) and no image tearing (formerly a symptom created by the absence of v-sync).
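To make the difference concrete, here is a minimal Python sketch comparing conventional v-sync with the adaptive behaviour described above. It assumes a simplified double-buffered model in which every frame waits for the next refresh; real drivers, and triple buffering in particular, behave differently. Both function names are invented for this illustration.

```python
import math


def classic_vsync_fps(render_fps: float, refresh_hz: float) -> float:
    """Displayed rate with v-sync always on (simplified double-buffer model).

    A frame that misses a refresh waits for the next one, so the displayed
    rate snaps to refresh/1, refresh/2, refresh/3 and so on.
    """
    if render_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    refreshes_per_frame = max(1, math.ceil(refresh_hz / render_fps))
    return refresh_hz / refreshes_per_frame


def adaptive_vsync_fps(render_fps: float, refresh_hz: float) -> float:
    """Displayed rate when v-sync is only engaged at or above the refresh rate."""
    if render_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    if render_fps >= refresh_hz:
        return refresh_hz   # v-sync engaged: capped, no tearing
    return render_fps       # v-sync released: no drop to the next fraction


for fps in (75, 60, 55, 45):
    print(fps, classic_vsync_fps(fps, 60), adaptive_vsync_fps(fps, 60))
```

In this model a game rendering at 55FPS on a 60Hz panel is shown at 30FPS with conventional v-sync but stays at 55FPS with the adaptive variant.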

As with AMD’s “PowerTune” feature, first introduced on their 6900 series, the 680’s GPU frequency is, to an extent, dictated by its TDP of 195W. If this limit is approached, the speed is reduced accordingly, ensuring the card remains within its TDP at all times. Ambitious tweakers will therefore be delighted by the facility to manually adjust this limit over a range of 62% (32% above the default and 30% below it), effectively increasing the card’s TDP to just over 250W!
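The arithmetic behind that adjustable limit is easy to verify. The snippet below is a sketch only; `power_limit_w` is a made-up helper, not part of any Nvidia tool, and the figures are the ones quoted above.

```python
DEFAULT_TDP_W = 195.0   # TDP quoted in the article
MAX_OFFSET = 0.32       # 32% above the default
MIN_OFFSET = -0.30      # 30% below the default


def power_limit_w(offset: float, tdp_w: float = DEFAULT_TDP_W) -> float:
    """Return the power limit in watts for a fractional offset from default."""
    if not MIN_OFFSET <= offset <= MAX_OFFSET:
        raise ValueError("offset outside the adjustable range")
    return tdp_w * (1.0 + offset)


print(f"maximum: {power_limit_w(MAX_OFFSET):.1f} W")
print(f"minimum: {power_limit_w(MIN_OFFSET):.1f} W")
```

The upper end works out at roughly 257W, which is the “just over 250W” figure, while the lower end is about 136W.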

Finally, we have Nvidia’s interpretation of dynamic overclocking, “Boost Clocks”. It closely resembles Intel’s CPU “Turbo Boost” feature: as soon as a heavy demand is placed upon the GPU, it will automatically trigger an increase in speed and, significantly, voltage. These increases are not fixed values but, rather, progressive calculations based upon the card’s temperature and power consumption at any given moment.

By default, the GPU’s clock speed when running in 3D mode begins at 1006MHz. This is the “base clock”, or minimum guaranteed frequency for the vast majority of 3D applications. From here, depending on the conditions, the speed will be increased to an average “boost clock” frequency of 1058MHz, although the further and longer the card remains running below its TDP and the GPU’s temperature below its thermal protection limit of 98 degrees, the more regularly and notably this speed will be exceeded. Meanwhile, the core voltage typically operates over a range of 1.075 to 1.175V and, as one might expect, is entirely dependent on the GPU’s speed.
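As a very rough illustration of the conditions just described, the sketch below reduces the boost decision to two states. Nvidia’s actual algorithm is progressive and is not documented here, so treat the functions as invented toys that use only the figures quoted above.

```python
BASE_CLOCK_MHZ = 1006     # minimum guaranteed 3D clock (from the article)
BOOST_CLOCK_MHZ = 1058    # average boost clock (from the article)
TDP_W = 195.0             # default power limit
THERMAL_LIMIT_C = 98.0    # thermal protection limit


def has_boost_headroom(power_w: float, temp_c: float) -> bool:
    """True while the card is below both its power and thermal limits."""
    return power_w < TDP_W and temp_c < THERMAL_LIMIT_C


def typical_clock_mhz(power_w: float, temp_c: float) -> int:
    """Toy two-state model: the real card moves in finer, progressive steps."""
    if has_boost_headroom(power_w, temp_c):
        return BOOST_CLOCK_MHZ
    return BASE_CLOCK_MHZ


print(typical_clock_mhz(power_w=150.0, temp_c=70.0))
print(typical_clock_mhz(power_w=195.0, temp_c=70.0))
```

A card drawing 150W at 70 degrees has headroom on both counts, whereas one sitting at its 195W limit falls back to the base clock in this simplified model.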

Advanced overclockers need not panic, since both the base and boost clock frequencies can be manually increased. They will, however, need to bear in mind that no matter how high these values are set, there is an unavoidable incremental speed penalty of around 40MHz that is applied as the GPU’s temperature rises to its maximum.

For those wanting to view further and higher quality photos, the 680’s gallery can be viewed here.

Outer Packaging.

Zotac’s interpretation of the GTX 680 bears the vendor’s typical, stylish black and gold livery and a highly tempting, albeit clichéd, slogan on the box.

Outer/inner packaging (like Russian Dolls?!)

It should be noted that Zotac offers an extended five-year guarantee with their card, two years more than any rival manufacturer I’ve come across.

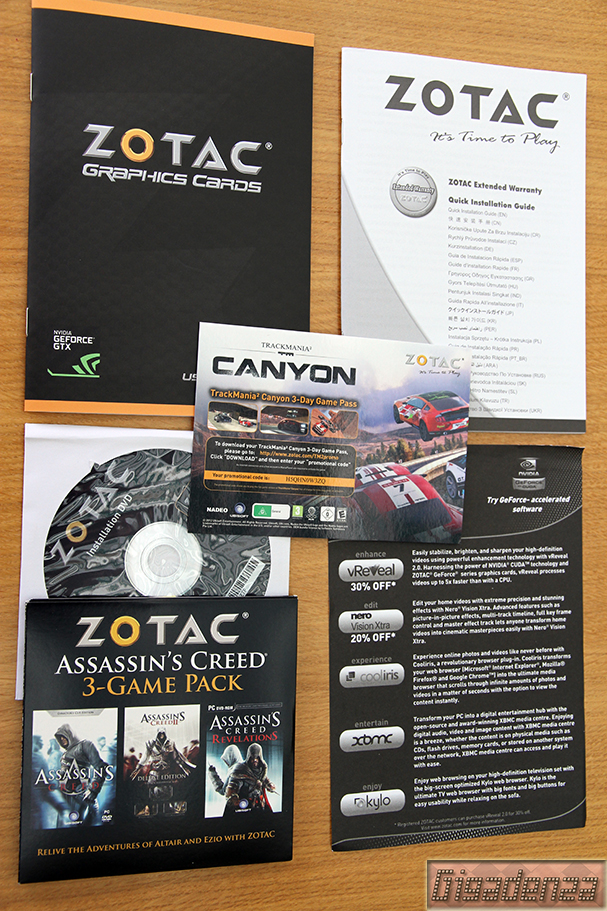

Inside the box.

Well, there it is. After such a long wait one could be forgiven for anticipating a radical departure from the norm in terms of physical appearance, but here the magic lies under the bonnet.

The card itself.

It was in 2006 that ATI first introduced the front intake fan as part of the stock cooling solution for their X1950. Nvidia swiftly followed suit and refined the design for the 8800 GTX. Such vivid memories make its continued presence strangely reassuring. As an air cooling system in traditional ATX cases, it could still be said to work far more efficiently than any non-standard or aftermarket alternative, including many of the dual fan solutions found on factory overclocked cards, which tend to be louder and exhaust a large portion of hot air inside the case.

The combination of a “squirrel cage” type fan, a shroud that completely covers the card’s heat sink and a large vented area on the backplate has always been extremely effective in immediately drawing on cool air supplied by a case’s front intake fans whilst simultaneously “pushing” any hot air generated by the GPU and memory directly out of the case before it has the chance to rise inside it and heat up other components. Until we see fundamental alterations to motherboard or case designs, it’s difficult to imagine AMD or Nvidia will deviate from a system that “ain’t broke”.

Right side view.

Left side view.

Like the HD 7970, the GTX 680 supports the latest, third generation of the PCI Express architecture, which effectively doubles the bandwidth each motherboard slot has available. This does not mean that those without PCI-E 3.0 motherboards will suffer any compatibility issues or performance penalties, since the earlier generation of PCI-E has already been shown to deliver virtually identical speeds when running the 680, even in SLI configurations. There may be a more noticeable bottleneck if, say, one were to run three cards in three PCI-E 2.0 x8 slots instead of a x16 x16 x16 or x16 x16 x8 three way setup, though even this scenario is unlikely to trouble any bar the most obsessive frame rate junkies.

Examining the left side of the card we can identify two 6-pin PCI-E power connectors, again emphasising the lower wattage required by the GPU. Its 195W TDP is comfortably served by the motherboard’s slot and these two connectors, which together give a combined total of 225 watts (3×75). Nvidia insists on a PSU with a minimum rating of 550 watts, capable of supplying at least 38 amps on the 12V rail, a modest requirement and no doubt great news for all who have endured the monstrous demands of previous cards and don’t want to consider yet another PSU upgrade!
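The power figures above can be sanity checked with a short calculation. This is a sketch only: the function names are invented for illustration, and the 75W ratings for the slot and for each 6-pin connector are the per-source limits implied by the “3×75” sum.

```python
SLOT_W = 75.0       # power available from the PCI-E slot
SIX_PIN_W = 75.0    # power available from each 6-pin connector
CARD_TDP_W = 195.0  # GTX 680 TDP quoted in the article


def board_power_budget_w(six_pin_connectors: int) -> float:
    """Total power the slot plus the given number of 6-pin plugs can supply."""
    return SLOT_W + six_pin_connectors * SIX_PIN_W


def rail_capacity_w(amps: float, volts: float = 12.0) -> float:
    """Power a PSU rail can deliver at the given current."""
    return amps * volts


budget = board_power_budget_w(2)
print(f"budget: {budget:.0f} W, headroom over TDP: {budget - CARD_TDP_W:.0f} W")
print(f"38 A on the 12 V rail: {rail_capacity_w(38):.0f} W")
```

The slot and two connectors leave 30W of headroom over the TDP, and the recommended 38 amps on the 12V rail corresponds to 456W.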

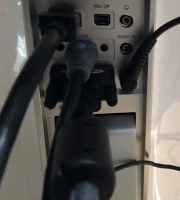

The card’s backplate.

The addition of a DisplayPort connector (on the bottom left) has sparked a slightly different design from the 580. The two DVI connectors (top right=DVI-D, bottom right=DVI-I) are now “stacked” vertically and the vented area that used to cover the entire width of the backplate is a little smaller. There is an additional and very small vent in between the HDMI connector (bottom middle) and DVI-I port. Evidently, Nvidia did not want to compromise on cooling!

Documentation and software.

Zotac includes three games from the ever popular Assassin’s Creed series and a “Trackmania Canyon” 3 day game pass which can be activated online. Also included are two Molex to PCI-E converters and a VGA to DVI adapter, neither of which is likely to be needed for much longer!

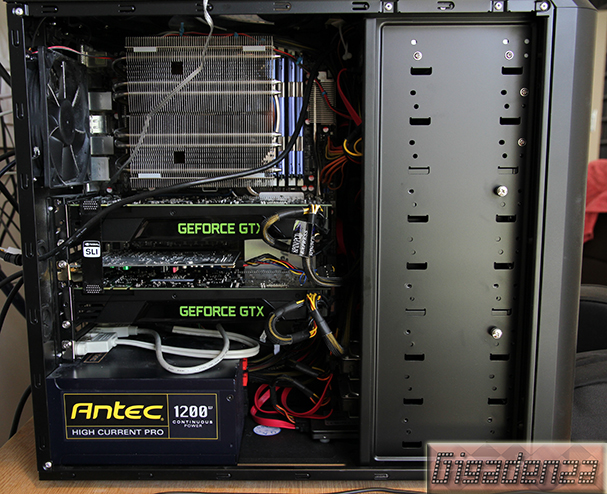

Two GTX 680s installed in an Antec 902 (V3) Gaming case.

At a little under 10 inches (1/2 an inch shy of a GTX 580 and almost a full inch shorter than the HD 7970), the 680 should be a trouble free lodger in virtually any typical mid or full size tower. Indeed, most modern micro ATX cases aimed at the marginally keener gamer should also provide roomy accommodation. Fellow techies will appreciate the sense of satisfaction and relief one feels when an upgrade is easier to fit than whatever it’s replacing!

Just to remind readers, please visit my Gallery Section for additional and higher quality photos of this and other products.