In May 2015 the adorably abominable Baron von Intel shrewdly surveyed a billowing binary horizon scattered with ever more swift and spacious collators of digitally interpreted humanity. Cracking his sparkling silicon knuckles for 10 fleeting nanoseconds, he decided the time had come to marry sublime solidarity to mollified volatility and boldly blitzed us with a brand new breed of exceptionally brisk repositories.

The technical roots of NVMe (Non-Volatile Memory Express) date back to 2009 and were the fruits of a consortium composed of over 90 companies. From 2010 to 2013, the design underwent three revisions and received its maiden commercial implementation in 2012, courtesy of Integrated Device Technology.

Its primary purpose was to promote a definitive platform for data conveyance in an era when solid state devices stood poised to become the industry’s preference over their revolving ancestors and, ultimately, to displace AHCI, an ageing and progressively cumbersome interface which had been conceived with only the latter in mind.

Two years later, Seoul-based electronics empire Samsung turned to the OEM market and gave benchmark-famished reviewers the enviable opportunity to dither and eulogize over the benefits of this groundbreaking medium in the guise of its XS1715, an enterprise class product capable of read rates in excess of 3 gigabytes per second and write speeds approaching 1.4.

Mere months later, Intel resoundingly entered the fray and, just as his initial venture into the world of “stationary” archiving had solidified new standards, so too did these polished, placid paraphrases transcend the powers of all their contemporaries.

His scintillating synergy of NVMe-blessed benefactors totalled no fewer than eight incarnations. These were divided into four categories according to their I/O credentials, with two models per category, a half-height PCI-E card and a standard 2.5” drive, which, in turn, were available in several capacities.

The P3500, P3600 and P3700 product lines were high-end business solutions, targeted at cutting edge data centres, professional content creators, rich companies seeking to justify inflaming budgets through sundry projects requiring boundless banks of servers, people who referred to their computer as a workstation, or insanely profligate enthusiasts who considered “professional” to be a redundant term in an age of self-financed fantasies.

By contrast, the 750 was a consumer-engineered variant with a lower life expectancy but superior performance for the price.

The traditional NVMe SSDs fostered a connector which was visibly indistinguishable from that observed on prototype SATA Express products such as Asus’s Hyper Express, an enclosure designed to integrate two M.2 modules, and a hybrid drive from Western Digital’s top of the range “Black” series that combined a 4TB mechanical disk and a 128GB SSD. The SFF-8639, hardly a memorable moniker, catered for faster throughput by using previously unallocated pins to exploit additional PCI Express lanes, raising the total number from two to four.

In order to accommodate standard NVMe drives, the host system required a MiniSAS or SFF-8643 port, until then an almost exclusive feature of extravagant RAID controller cards and server grade motherboards. To resolve this, several forges of masterful peripheral hospitality hatched reworked BIOSes and a nifty adaptor to convert their board’s M.2 slots to MiniSAS sockets. Asus’s creation, entitled the “Hyper Kit”, came bundled with its TUF Sabertooth X99 board and was available as an optional upgrade to owners of other Asus boards incorporating Intel’s Haswell-E chipset.

MSI were quick to follow with their M.2 to turbo MiniSAS converter, which, somewhat more generously, was enclosed with every one of the vendor’s X99 boards from June 2015 onwards and could also be purchased separately to install in any Haswell-E board equipped with an M.2 socket.

Officially, the slots needed to be wired to four third generation PCI-E lanes, though the latter requirement was only essential if one wanted to be certain of optimum transfer rates.

Assuming manufacturers had released BIOSes that contained the necessary modifications, there was no technical reason for these adaptors and their associated SSDs to refuse to function when used with any PCI-E M.2 slot regardless of type, or the number of lanes available.

For example, Gigabyte’s SOC Force X99 drew its NGFF sustenance from the chipset (PCH) in order to allocate all of the CPU’s bandwidth to the board’s PCI-E slots. Thus its M.2 socket, though 4 lanes wide, only supported second generation speeds and was hence capped to a maximum of 500 megabytes per second per lane and a total of 2.0 gigabytes per second. For two lane M.2 slots, the limit decreased to 1000 megabytes per second.

With nascent NVMe drives showcasing typical read/write rates in excess of 3.0 and 1.4 gigabytes per second respectively, this was not sufficient to reap the full benefits of the protocol. Therefore, those without the choice of a third tier NGFF socket were better off opting for a card moulded drive and, even then, there were further vexatious dilemmas to consider.
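For those who prefer sums to sentences, the slot arithmetic above can be sketched in a few lines of Python. This is merely an illustration under stated assumptions: the per-lane figures are the post-encoding values quoted in this article (500 megabytes per second for second generation, roughly 984.6 for third), and the function names are hypothetical rather than borrowed from any real tool.

```python
# Rough sketch: can a slot's bandwidth keep up with a drive's quoted read rate?
# Per-lane figures are the post-encoding values used in this article;
# all names here are illustrative only.

MB_PER_LANE = {2: 500.0, 3: 984.6}  # PCI-E generation -> megabytes per second per lane


def slot_limit_mb(generation: int, lanes: int) -> float:
    """Theoretical ceiling of a slot in megabytes per second."""
    return MB_PER_LANE[generation] * lanes


def is_bottleneck(generation: int, lanes: int, drive_mb: float) -> bool:
    """True when the slot cannot carry the drive's quoted rate."""
    return slot_limit_mb(generation, lanes) < drive_mb


if __name__ == "__main__":
    drive_read_mb = 3000.0  # "in excess of 3.0 gigabytes per second"
    for gen, lanes in [(2, 2), (2, 4), (3, 4)]:
        limit = slot_limit_mb(gen, lanes)
        verdict = "bottleneck" if is_bottleneck(gen, lanes, drive_read_mb) else "sufficient"
        print(f"Gen {gen} x{lanes}: {limit:.0f} MB/s -> {verdict}")
```

Both second generation configurations fall short of a 3 gigabyte per second drive, while four third generation lanes leave a little headroom.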

Boards constructed around Intel’s mainstream chipsets such as the Z-77, 87 and 97, were each assigned a quota of 16 3rd generation lanes over which to carry data to and from the PCI-E subsystem. If the user elected to install a high end video card in one of the slots, all 16 lanes could either serve it exclusively or be divided amongst additional peripherals in portions of 8 or 4. As the NVMe SSDs occupied 4 lanes, this negated the possibility of SLI, since Nvidia’s pixel preachers demanded a minimum of 8 lanes in order to render concurrently, though a Crossfire setup composed of two AMD cards feeding off 8 and 4 lanes, coupled with an SSD that exploited the remaining four, was a viable alternative.
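The lane juggling described above boils down to a simple budget check, sketched here in Python. The 16 lane pool, the 4 lanes claimed by an NVMe SSD and the 8 lane minimum per card for SLI all come from the paragraph above; the function name and layout are hypothetical, and the sketch ignores any PLX style lane multiplication.

```python
# Sketch of the lane budgeting described in the text. Figures come from the
# article; names are made up for illustration and no PLX chip is assumed.
from typing import Sequence

CPU_LANES = 16   # third generation lanes on the mainstream boards discussed
NVME_LANES = 4   # lanes occupied by one NVMe SSD


def fits(gpu_lanes: Sequence[int], with_nvme: bool = True, pool: int = CPU_LANES) -> bool:
    """True if the requested lane widths fit inside the available pool."""
    needed = sum(gpu_lanes) + (NVME_LANES if with_nvme else 0)
    return needed <= pool


if __name__ == "__main__":
    print("Single card x16, no SSD:", fits([16], with_nvme=False))
    print("SLI x8/x8 + SSD:", fits([8, 8]))
    print("Crossfire x8/x4 + SSD:", fits([8, 4]))
```

SLI with an SSD asks for 20 lanes and so fails the check, whereas Crossfire at 8 and 4 lanes plus the SSD consumes exactly 16 and passes.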

For a mainstreamer whose motherboard harboured our magical multiplexor, the PLX chip, that effectively doubled the number of lanes through scrupulous PCI traffic management, this was not an issue. Regrettably, not all were wealthy or wily enough to account for their future desires.

Confounding Connections

To elevate confusion to stratospheric heights, the diagrams on the left depict the cable/backplane receptacle that each type of drive connects to as opposed to the connectors located on the drives themselves. For heaven’s sake, peruse this image with the utmost scrutiny (for it took me three hours to compile) and check that the motherboard or controller card you are intending to use supports the type of drive you are attempting to connect. Below is the transcript of a useful conversation I conducted with myself.

Q. May I connect a SATA drive to a SAS port?

A. You may indeed, and operations should proceed as though it were none the wiser.

Q. Could I plug a SAS drive into a SATA port?

A. Of course you could, but no reads or writes shall it execute, despite appearing most attractive.

Q. Would it pay to hook a SATA drive to one port of a SATA Express endowed motherboard?

A. It certainly would. SATA Express ports can double up to warmly welcome SATA drives when necessary.

Q. How about hooking a SAS drive to one SATA socket of a SATA Express connector?

A. The diagrams I have observed indicate the pins assigned to PCI-E lanes one and two of the SATA Express interface double up as SAS ports A and B. Moreover, both device connectors are interchangeable. You can plug the drive end of a SATA Express cable into both a SAS and a SATA Express drive, but not into an ordinary SATA drive. Though SATA Express utilizes PCI-E lanes to transport data, it does so via the SATA bus and two standard SATA ports, hence, in theory, a SAS drive’s platters should spin to win. Nonetheless, sans first hand evidence, I wouldn’t stake my minuscule reputation on it.

Q. Might I suggest connecting a SAS drive to a motherboard’s NVMe/SFF-8639 port, either directly, if the board has one as standard, or via an M.2 to SFF-8639 converter if it only features an M.2 slot?

A. An inspired and logical proposition, though to yield success, the motherboard’s SFF-8639/M.2 port would need to be wired to both the SATA and PCI-E buses.

Q. Could it hurt to marry an NVMe drive to a SATA Express port?

A. Not in the slightest, though a worthy consummation would not be forthcoming. The speed would be bound by the bandwidth of the interface, which at best would be 2 gigabytes or 16 gigabits per second, but most likely 1 gigabyte or 8 gigabits per second. In other words, a waste of an exorbitant wedding.

Bandwidth Bemusement

Having already elaborated on the repugnantly contradictory figures relating to the theoretical and actual throughput of PCI-E’s progressive implementations, this paragraph seemed a fitting point to throttle some infuriatingly stubborn loose ends.

Every incarnation of PCI was subject to what the industry jauntily jargonized as “overheads” which, quite apart from instilling fear in the soul of every entrepreneur’s accountant, compromised the capacity of the interface. These encumbrances were enforced by the imperfect encoding techniques used to exchange data between components.

PCI-E 1.0, 2.0 and SATA utilized an 8 to 10 bit procedure, where every 10 bit transmission resulted in only 8 bits of data being broadcast, thereby incurring a net performance loss of 20%. PCI-E 3.0 introduced a much more efficient 128 to 130 bit scheme, which delivered 128 bits of data for every 130 bit transmission and generated a vastly reduced penalty of 1.54%.

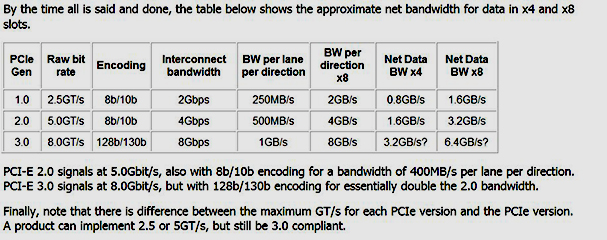

Considering reviewers, manufacturers and pundits were notoriously wayward over which values they divulged in specifications, articles and amidst feverish forum fanaticism, the table above is intended to illustrate the correlation of raw data rates to those after the overheads have been deducted.

How I pray for the day when neatly framed figures terminally nullify aggravating confusion, for attempting to do so in words is as tricky as reciting Shakespeare in Hebrew, and as pointless as reciting Shakespeare in Hebrew…..backwards.

This analysis of the overheads coincides with the formulae applied in my table. For PCI-E 1.0, 2.0 and 3.0, the figures quoted prior to encoding are 2.5, 5.0 and 8.0 gigatransfers per second (GT/s) respectively for each individual lane. As each transfer carries one bit per lane, gigatransfers equate to gigabits, hence these figures can also be interpreted as 2.5, 5.0 and 8.0 gigabits per second.

One byte equals eight bits, so to procure the values in bytes, we divide each number by eight, giving us 312.5 megabytes per second/lane for PCI-E 1.0, 625 megabytes per second/lane for PCI-E 2.0 and 1000 megabytes or 1 gigabyte per second/lane for PCI-E 3.0.

Next, we subtract the overheads. 20% taken from 312.5 (for PCI-E 1.0) leaves 250 megabytes, or 2000 megabits (8 times 250), or 2.0 gigabits per second/lane. For PCI-E 2.0, the equation is the same: 20% from 625 gives us 500 megabytes, or 4000 megabits (8 times 500), or 4.0 gigabits per second/lane, while for PCI-E 3.0, with its refined encoding method, we subtract 1.54% from 1000, leaving us with 984.6 megabytes per second/lane.
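The whole chain of sums, from gigatransfers to post-overhead megabytes, can be condensed into a short Python sketch. It assumes nothing beyond the figures already quoted (2.5, 5.0 and 8.0 GT/s, 8 to 10 bit and 128 to 130 bit encoding) and uses decimal units throughout, as the article does. The function and dictionary names are hypothetical.

```python
# Sketch of the per-lane arithmetic: raw GT/s -> raw MB/s -> MB/s after encoding.
# Figures are those quoted in the article; names are illustrative only.

# Encoding scheme -> (payload bits, transmitted bits)
ENCODING = {"8b/10b": (8, 10), "128b/130b": (128, 130)}

# PCI-E generation -> (raw rate per lane in GT/s, encoding scheme)
GENERATIONS = {
    "1.0": (2.5, "8b/10b"),
    "2.0": (5.0, "8b/10b"),
    "3.0": (8.0, "128b/130b"),
}


def overhead_percent(scheme: str) -> float:
    """Share of each transmission lost to encoding, as a percentage."""
    payload, total = ENCODING[scheme]
    return (1 - payload / total) * 100


def raw_mb_per_lane(gigatransfers: float) -> float:
    """One transfer carries one bit per lane, so divide gigabits by eight."""
    return gigatransfers * 1000 / 8


def net_mb_per_lane(generation: str) -> float:
    """Megabytes per second per lane once the encoding overhead is removed."""
    gigatransfers, scheme = GENERATIONS[generation]
    payload, total = ENCODING[scheme]
    return raw_mb_per_lane(gigatransfers) * payload / total


if __name__ == "__main__":
    for gen, (gt, scheme) in GENERATIONS.items():
        raw = raw_mb_per_lane(gt)
        net = net_mb_per_lane(gen)
        print(f"PCI-E {gen}: raw {raw:.1f} MB/s, overhead {overhead_percent(scheme):.2f}%, "
              f"net {net:.1f} MB/s ({net * 8:.0f} Mbit/s)")
```

Run as written, the net figures come out at 250, 500 and roughly 984.6 megabytes per second per lane, which in megabits is 2000, 4000 and a shade under 7877.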

Complex, but just about digestible, do we dare glance at the table below?

Let’s try to establish what each column defines and whether or not the pre and post overhead values are consistent with those listed above.

PCI-E Gen – The generation of the PCI-E interface.

Raw Bit Rate – The data transfer rate per lane in each direction before encoding (in Gigatransfers per second).

Encoding– The encoding scheme employed for the interface.

Interconnect Bandwidth – The data transfer rate per lane in each direction after encoding (in gigabits per second).

BW per lane per direction – The data transfer rate per lane in each direction after encoding (in megabytes per second). This value is obtained by dividing the number in the fourth column by eight.

BW per direction x8 – The data transfer rate of an 8 lane slot in each direction after encoding (in gigabytes per second). This value is obtained by multiplying the number in the fifth column by eight.

In the case of PCI-E 3.0, the values in columns 4, 5 and 6 have been rounded to the nearest gigabit/gigabyte due to the overhead of 1.54% being meagre enough for a ravenous corporation to disregard.

Net Data BW x4 & x8 – Net data bandwidth for a x4 and x8 slot in each direction. In mathematical terms, these values are obtained by multiplying the numbers in the fifth column by four or eight (depending on the number of lanes) then subtracting 20% from each result. However, this deduction evidently cannot relate to the encoding penalties as those have already been accounted for.

Note that for PCI-E 3.0, which is only supposed to incur a 1.54% deficit, the anomaly is repeated, with a further 20% going missing in these final two columns for no apparent reason.

Note also that the stated capacity of a single PCI-E 2.0 lane is 400MB per second instead of 500MB as disclosed in the table, again 20% less than the value that has already allowed for the overhead and therefore 36% lower than the lane’s raw bit rate of 625MB per second.
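To see how the mystery deduction stacks on top of the encoding penalty, here is a small Python sketch. It applies the second 20% cut exactly as the table appears to, with no claim whatsoever as to why it exists. The per-lane inputs are the post-encoding and raw figures worked out earlier in this article, and every name in the code is hypothetical.

```python
# Sketch of the puzzling "net data" columns: post-encoding bandwidth with a
# further, unexplained 20% removed. Inputs are the article's own figures.

POST_ENCODING_MB = {"1.0": 250.0, "2.0": 500.0, "3.0": 984.6}  # per lane
RAW_MB = {"1.0": 312.5, "2.0": 625.0, "3.0": 1000.0}           # per lane
EXTRA_DEDUCTION = 0.20  # the second 20% that no source seems to explain


def net_data_mb(generation: str, lanes: int) -> float:
    """Post-encoding bandwidth for a slot, minus the extra deduction."""
    return POST_ENCODING_MB[generation] * lanes * (1 - EXTRA_DEDUCTION)


def loss_versus_raw_percent(generation: str) -> float:
    """Total shortfall of one lane's net figure against its raw rate."""
    return (1 - net_data_mb(generation, 1) / RAW_MB[generation]) * 100


if __name__ == "__main__":
    for gen in POST_ENCODING_MB:
        print(f"PCI-E {gen}: x1 {net_data_mb(gen, 1):.1f}, x4 {net_data_mb(gen, 4):.1f}, "
              f"x8 {net_data_mb(gen, 8):.1f} MB/s, "
              f"{loss_versus_raw_percent(gen):.1f}% below raw")
```

Because the two 20% cuts are applied one after the other, they compound rather than add: a PCI-E 2.0 lane ends up at 400 megabytes per second, which is 36% below its raw 625.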

I have absolutely no idea when these figures were published, where they fit in, or why they even exist. Painstaking research revealed no explanation, though many sources appear to calculate PCI-E bandwidth in accordance with this formula. Should you stumble upon the critical reason, don’t call me, just subtract an additional 20% from every “post overhead” value listed in my bloody table!

Returning to NVMe.

Several aspects of this supremely efficient protocol made it a worthy successor to AHCI, which, after a decade of faithful service, stood poised to reveal its age. The most momentous enhancement was, without question, its ability to process command queues of fantastically greater depth in parallel. Whereas AHCI was limited to a single queue of 32 commands, NVMe was capable of addressing over 65000 queues, each over 65000 commands in length.
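To put that gulf in perspective, a few lines of Python will multiply it out. The sketch assumes the limits usually quoted for the two protocols, namely one queue of 32 commands for AHCI and 65535 queues of 65536 commands for NVMe; these are theoretical ceilings, not what any real drive or driver of the time actually exposed, and the names are hypothetical.

```python
# Sketch comparing the theoretical command capacity of AHCI and NVMe.
# Limits are the commonly quoted protocol maxima; names are illustrative.

AHCI = (1, 32)            # (queues, commands per queue)
NVME = (65_535, 65_536)   # (queues, commands per queue)


def max_outstanding(queues: int, depth: int) -> int:
    """Most commands that could be in flight at once."""
    return queues * depth


if __name__ == "__main__":
    ahci_total = max_outstanding(*AHCI)
    nvme_total = max_outstanding(*NVME)
    print(f"AHCI: {ahci_total:,} commands")
    print(f"NVMe: {nvme_total:,} commands")
    print(f"Ratio: {nvme_total // ahci_total:,} to 1")
```

On those assumptions NVMe could, on paper, hold roughly 4.29 billion commands in flight against AHCI’s 32, a ratio of over 134 million to one.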

For a fantastically crude but entertaining analogy, consider a customer at your local supermarket with 32 items in his trolley and a lone member of staff to swipe his groceries across the machine that goes *BEEP*.

Directly behind is another figure, a disgruntled lady in a desperate hurry, two baskets stuffed with an amalgam of condiments, vegetables and ready meals, also totalling 32, and after her, a second gentleman, trying his dandiest to be patient but in truth, most aggrieved of all due to his irrational desire to ingest 32 packets of Ginger nuts. Enter the store manager, Mr Norman Virtue Morris Evans (Nvme for short).

“Don’t worry,” he assures. “Today we are setting in motion an inspiring new initiative. No doubt you’ll be familiar with our rival’s hollow promises to open a new checkout whenever a customer is forced to wait in line. Well, when we make such pledges, we provide, and that’s putting it mildly.

Recently, one of our most illustrious benefactors, a Mr. Von Intel, made an extremely generous donation, on the strict stipulation that every penny, pound, dollar and dime was invested to ensure a spiritual shopping experience for our priceless clientèle. It is thus with pride that I announce, in approximately one hour, every aisle in this supermarket will be adorned with the richest repertoire of produce imaginable. Choices so abundant you shall shed tears of dumbfounded delight. A tea for every hour of the day.

A coffee for every sentence of an agitated email. Cupcakes where before there were no cups. Non-alcoholic potatoes, genetically reformed bananas. Sugar free cereal, cereal free cornflakes, Paleo friendly porridge, free range mineral water, big fat cheese, thin carbohydrate butter, fractionally lower than medium protein milk. Gluten-absent, farm reared chocolate and openly organic baguettes.”

As you’ll have probably guessed, this futile variety of foodstuffs and the shelves it populates are metaphors for a hard drive’s data and memory chips.

“With such an abundance of choice,” continues Norman, bristling with enthusiasm, “one extra checkout would scarcely suffice, which is why, as of 11 o’clock this morning, we’ll be opening precisely 65535.”

As you’ll have gathered by now, these checkouts allude to the number of simultaneous command queues an NVMe drive can attend to.

“And how about a special bonus?” Norman went on, addressing our three faithful foragers. “Why don’t you go grab one of our swanky new trolleys over by the entrance? You may find them rather awkward to begin with, their proportions a trifle large for comfort, but trust me, this whole project would have been worthless if we’d stuck with the old design. Most importantly, there’s not a wobbly wheel amongst them. Now here’s a challenge I know you’ll love. Each of you decant your 32 purchases to a trolley, then abandon civility and try to cram in another 65504.

You with the biscuits, go to the confectionery aisle and only pick items from there, pile ’em as high as you can. And you two, mix it up, stash stuff from everywhere else, as wide a selection with as many different prices as possible, from £5 puddings to 40 pence peaches.”

As you’ll have astutely surmised in this increasingly contrived parable, the items removed from shelves are chunks of data – £5 = 512k and 40p = 4k – while the shoppers with their trolleys, symbolize software scurrying around the drive and accumulating read commands.

Customers one and two, with their broad assortment of sustenance, equate to random read requests from the SSD’s memory, whilst our voracious biscuit addict represents sequential reads.

“Once you’ve finished,” Norman instructs, “roll those baskets back to the checkouts, relax, and allow our solicitous packer in chief to scan and bag your rations ready for the world outside. He looks a little odd, he was made redundant as a circus juggler because the tumblers and tiger tamers felt upstaged by his act, but don’t let his size or his 65536 arms intimidate you.

He completed our training program with flying colours, his coordination is second to none, he can multi task as seamlessly as any woman of woman born. If the machines fail, he knows every bar-code and price by heart, he’s remarkably convivial and best of all, since each of his hands can serve a separate checkout, he’s over 65000 times cheaper to employ. We don’t actively seek to undercut the minimum wage but when someone applies for a job out of pure passion and appears uncannily well-qualified, why deprive them of the pleasure of fulfilling their purpose?

Finally, allow me to introduce another inspiring incentive devised to prevent the anti-social sterility of on-line foraging from perniciously eroding our ability to interact. Each of you privileged customers has been appointed a personal stock checker with a trolley that matches the dimensions of yours, along with their own inverted checkout. Let’s call it a check-in. As you choose supplies, your entire harvest will be studiously logged with replenishments conducted as required and tactful dietary suggestions made in anticipation of your next gracious visit. Please don’t let us down.”

As you’ll have keenly deduced, our appendage endowed swiping supremo is posing as the hard drive’s controller. The stock checkers are our customers’ polar opposites and represent applications making write requests, whilst the items in their respective trolleys denote random and sequential segments of data, though this time, they are being written to the drive.

Of course, for Mr. Evans’s sensational scheme to catch fire, he needed enough shoppers and stock minders to engage all the checkouts, with each able to fill their trolley to the brim and manoeuvre it with the proficiency of Ayrton Senna powering his way to pole in Monaco. However, no further hungry souls wandered into the store that day and try as they might to take advantage of an offer that was too true to be bad, none of our lucky trio or their devoted assistants could stack more than 32 items on their checkout’s conveyor belt for our ambidextrous phenomenon to dispatch or receive, leaving him with an abundance of “next customer please” signs and 65530 idle hands to scratch his puzzled head.

If fate itself were conspiring to thwart a heavenly resurgence of high-street consumerism, its cruel finger was aimed squarely at Norman’s slumped and dejected figure. Fortunately, as months passed, our customers’ genetics altered and they began to whirl their wheelers with enhanced vigour. Their stacking techniques improved, and word of the store’s extraordinarily generous promotions saturated its sleepy community. Soon, cavernous and once stark aisles were brimming with a bustling and sociable populace.

Trolleys stood docked at every checkout, buried beneath ambrosial delights. Packets, boxes, bottles, bags and jars wove lines of irregular dominoes across the floor, twisting around pyramids of weekend deals as myriad queues snake from ice-cream parlours on shimmering summer afternoons, and the magnanimous monster of many tentacles, his juggling skills now ceaselessly sought and applied with mesmeric panache, gave every enraptured shopper his most enchanting smile as he picked and packed their produce to nourish as nature intended. Not good enough? Tough, for this analogy is hereby terminated.

The Negative Side of Nullified Volatility

As NVMe had materialised during a transitional period in the digital storage industry, when choice was more stifling than liberating, manufacturers wilfully snatched confusion from the jaws of simplicity and ensured the resulting drives took on more forms than a shape-shifter constructed from genetically modified polymorph.

I shall refrain from regurgitating information it was as taxing for me to compile as for intrepid readers to assimilate, but for any literary masochists wishing to indulge in a generous portion of facts pertaining to the anti-revolutionary revolution, a reasonably coherent chronicle resides…around about here. For the rest, a refreshingly succinct alternative shall make an appearance in a couple of scrolls.

Whether we wistfully hanker after the days of swapping 25 floppy disks to savour five frames of pre-rendered animation, of manhandling breeze block sized hard drives whose platters could barely accommodate a lemming’s autobiography, or of securing mortgages to purchase CD-ROM drives that took longer to install Windows than for a bogus builder to install windows in a house, the aplomb of our paperless archivists lay at the mercy of four parameters:

1: The bandwidth of the interface.

2: The speed of the media.

3: The versatility of the protocol.

4: How effectively software exploited all three.

When examining the chart above, many might ask how Mushkin’s Scorpion, G.Skill’s Blade and OCZ’s Revodrive were able to keep Intel’s and Samsung’s competition honest, despite all three being native “SATA-rists” and untouched by the magic of NVMe. The answer is simple: these cards cunningly circumnavigated the limitations imposed by the SATA interface and its ageing protocol by relying on multiple drives and controllers to communicate over separate PCI-E lanes.

Though they were identified as a single drive by the OS, every one consisted of four individual SSDs, each armed with a “SandForce” controller, and ultimately linked to 8 second generation PCI-E lanes via an additional RAID controller. This yielded a theoretical bandwidth almost six times higher than would have been permissible over the SATA bus and added the ability to address three extra command queues in parallel.

For reference, I have included a set of results attained by my own beloved warhorse in the same benchmark. Discerning or returning technophiles will have probably noted that my adopted chassis since Spring 2013 was the refined flagship of Lian Li’s imperial “Armorsuit” convoy, the PC-P80N. Secluded within its silky aluminium shell was a rampant quartet of Crucial M550 drives, a terabyte apiece, hosted by Areca’s ARC-1883x, a dedicated PCI-E 3.0 x8 RAID controller card which brandished a dual core 1.2 GHz processor and 2GB of ECC memory.

As can be observed, in terms of sequential aptitude, it outshines every rival save for Intel’s P3700, which takes the lead in the write test but falls behind on reads.

For 512K random reads and writes, it is again the best of the bunch and by an impressive margin. Scattered writes at 4k are on a par with all except the NVMe drives, while 4k random reads see it head the field once more. For 4k blocks at maximum queue depth, its reads place it fourth overall though it limps across the line last in the write test.

Altogether, the setup cost approximately £2380, which sounds extortionate but breaks down as 59p per gigabyte, making it the second cheapest on show. An arguably enticing alternative, especially for those who believed the marriage of SATA and AHCI had long, healthy years left to astound and were loath to defend their ill-judged investments!

Nevertheless, one can also infer from those florid fluorescent bars that both Intel’s and Samsung’s solids gathered significant momentum when tasked with random reads and writes and were able to nose ahead once data chunks became smaller and command queues lengthened. Thus, even in its tender infancy, we were granted a tantalizing glimpse of this incredible protocol’s potential once applications had been primed to pose sterner tests. Such was the quantum leap it provided that many declared every other key component in our systems would need upgrading several times before it could possibly re-emerge as a hindrance. All that remained was to ruefully cogitate over which bottle would be next to rear its ugly neck.