In the world of Cinema I have no patience for prequels. A Prequel is what occurs when a group of shrewd producers with more cash than creativity are too lazy, shorn of ideas or scared to confront the rigorous challenge of crafting a commendable sequel and decide they will better charm critics and audiences by recruiting subjugated writers and submissive directors to tell a story that ends three minutes, years or centuries before the original one began.

Annoyingly it works. The notion that we are being shown something historically significant as opposed to merely “what happened next” is potent enough for prequels to procure disproportionate levels of respect, even when their plots, characters, scenery, scores and scripts are as disjointed, one-dimensional, lacklustre and cliché-ridden as those of any sequel.

But wait. Didn’t George Lucas produce, write and direct every one of his prequels? Were they all poorly thought out? And what about Batman? Christopher Nolan, a submissive director? Sooner could you convince me that this creature is Lucifer incarnate.

Point one, a cat is a witch’s familiar, so not a million miles from his unholy highness. Point two, the Star Wars prequels hardly evoke universal acclaim; frankly, Phantom Menace was a pot-boiler. As for the flying mouse, nobody said there weren’t exceptions, but if I allowed every one of them to govern my analogies, you’d be staring at a blank page. So shut up!

In other areas of consumerism, prequels of a different kind arise to satisfy intelligent nostalgia and these, I see far greater sense in.

Many Audiophiles construct sound arguments singing the praises of analogue intimacy, valve bound richness and vinyl warmth, making microphones such as the Gemini 2 from SE Electronics and Linn’s sensational Sondek LP12 turntable entirely worthy reincarnations throughout eras of icy digital clarity.

Enough cyclists scoff at the Garmin gawping, Lycra laced, gel propelled road racer to give stable steel frames, infallible hub gears and handsome chrome trimmings a sustained and noble presence amidst a mercurial subculture saturated with Carbon fibre fads and Titanium trends.

Motor minded traditionalists with petrol on the brain are justified in their desire to embody an eternity of automotive ecstasy within the timeless chassis and sumptuous tan leather lining of that Morgan they ordered during the Cold War.

Vineyards would love to discover how to ferment Tutankhamen’s Chardonnay at will, but they can’t. Tutankhamen only drank red wine.

When we enter the realm of Computers, where the luxuries of fleetness and efficiency take ruthless precedence over impractical arguments to preserve a slow and cumbersome past, there is only scope for sequels, unless we consider limited editions, customised character, or laptops like this…

…and even then, the “period features” permeate the aesthetics and not the components.

In every other respect, the mere notion of an I.T prequel precipitates a paradox that defies common sense since all those conceivable have already been composed.

“Mr Cook? Mr Iovine? Listen, I have this fantastic idea for a prequel to the iPad Air.”

“Excuse me? We did that, it was the iPad 3.”

“Mr Shin, please, don’t buy Jupiter, it would be a disastrous investment for Samsung. Awkward commuting past Mars, through that beastly asteroid belt, plus the land is way too gassy to build plants on. Save the cash for my project. It’s a prequel that tells the story of what happened before the Galaxy S6.”

“Sorry friend, I may heed your planetary advice and plump for Venus, just as soon as the acid rain stops. But how much more could be said in a prequel to the S6 than what the S5 has already revealed?”

“Green Eyes, Red Beard. Lay down your transistors, cast aside your texels, forget your ROP driven lust for revenge and let’s make a prequel that divulges every event leading up to the first ever 28nm GPU.”

Red Beard (aka. AMD): “What? You mean in the way the Social Network chronicled Facebook’s technical rise and moral fall? Granted, there may be a movie in that.”

Green Eyes (aka. Nvidia): “I fancy that it would be as tiresome as watching Steam’s progress bar and as pointless as filming yourself watching Steam’s progress bar.”

Red Beard: “What, just because the grotesque money sucking gravy train that was your odious Kepler failed to emerge before my vibrant and virtuous Tahiti?”

There’ll be time aplenty for pettiness later in the article. For now, think practically. I don’t mean a picture prequel. I mean a physical one. An embodiment, something you could hold, install and utilize like any visually inclined device.

Green Eyes: “Nonsense. Even if it were somehow possible to amalgamate my Fermi flagships with his Cypress canoes to create a tawdry silicon tribute, who would be interested in reminiscing over yesterday’s rendering?

A video card is designed, forged, ordered, delivered, torn open, then sentenced to a gruelling fate inside a gamer’s galley until its core is crippled and its memory massacred, whereupon it is ripped from its dusty manacles and cast adrift to make way for a more powerful oarsman.

The only time it sees light is when un-boxed. It is only ever glimpsed through a lens and even though our loyalists love to savour factory freshness, handsome heat sinks and machined élan, frame rates are what perpetuate their profitable addiction.”

Come now, you sadistic narrow-minded curmudgeon. A world exists outside your jaded dominion. Are you seriously asserting one couldn’t capitalize on a lamenting Quake veteran’s yearning to revive a little ancient Voodoo Magic?

Green Eyes: “A Voodoo curse, my friend, that I destroyed before its unspeakable evil could corrupt my faithful followers. Move on with your wretched musings.”

The intrinsic elements of a sequel for the big screen are startlingly similar to those that propel subsequent generations of hardware and software to a house from a warehouse, especially in the case of action movies.

Once a film has evolved into a well oiled franchise, and a mobile phone into a stressed mother’s right hand, the “golden rules” that instigate each set of selling points are virtually interchangeable and akin to a lapsed Pilgrim’s Christmas list. Bigger, better, faster, prettier, more of… please, Santa.

These certainties do not apply uniformly to every technological sequel. A combination of eco awareness, design ingenuity and an instinct to elude Moore’s law causes manufacturers to occasionally rein in quantities, engineer smaller solutions, prioritize style over substance and vice versa.

Vista might have been prettier than Windows XP though few blessed with a brain would claim it was better. Conversely, those old enough to swoon at the mention of Cooler Master’s sublime ATC-110 would be ridiculed if they declared it more thermally qualified than the brutish Stackers, Storms and Cosmoses that severed its legendary lineage. Intel’s Ivy Bridge-E housed close to half a billion fewer transistors than its elder, the Sandy Bridge-E, and if anybody suggested the Enigma to be smaller than a NUC, their sense of perspective would be the subject of grave concern.

There is, however, one omnipotent variable that is in constant ascent throughout the evolution of all desktops, laptops, tablets and mobiles, and every key component they incorporate.

Its name is Speed. In technology’s mercurial domain, no respected entity has ever wilfully designed a slower sequel, because to every one of them, any hint of a theory that slower could somehow be superior is as preposterous as insisting that a tortoise could win a race, despite what Aesop might tell you.

Which brings us, albeit scenically, to sequels of a decidedly graphic nature. Are we talking Aliens or Alien Resurrection? The Godfather Part 2, or Father of the Bride Part 2? Final Destination 4 or Fast and Furious 404, a movie so deplorable, it was never made. You’ll note that I have now resorted to alternately naming good and bad sequels for no apparent reason. The point being, in these particular “picture studios”, the “sequential” philosophy was all but redundant.

Their “productions” could be ugly to behold, extravagantly budgeted, grossly proportioned and have as many superfluous characters as the Civil Service yet still garner approval from the fans, respect from the hacks and smash all Box Office records provided they were shorter than the director’s cut of Gandhi: The Nazi Years. Nvidia’s next sequel was always “better”, because it was always “faster”. AMD’s latest chapter could never be “worse”, because the ending never dragged, even if the projector didn’t support FreeSync.

The question was, had Red Beard acquiesced to avarice and embraced the same exorbitant pricing policy as his aquamarine adversary? How much would he charge for a ticket to an “HBM” screening of 3DMark? Was the Fiji to yield the first solo captained vessel in his own fleet of “hyper flagships”? Would its rugged crew of rasters and streamers masterfully manoeuvre the cannons upon its mighty 4096 foot deck and mercilessly maim the malevolence roaming the violent verdant abyss beneath? Or would a “Titanic torpedo” spiral from its icy depths and penetrate an otherwise impervious prow?

Can I really be bothered to tell you or should I just post a pile of links to reviews that use words like configurability and incremental, expressions such as “wholly implemented” and “perceived variance in probable percentile terms” and devote five yards of HTML to raving about yet another stupidly sophisticated dynamic over-clocking scheme designed to monitor temperature, wattage drain, air quality, case décor, radiation levels and psycho kinetic disturbances, before providing half a megahertz of core return based on the player’s height, weight, credit rating, marital status, medical history, Twitter profile, Steam reputation, daily calorie expulsion, sleeping habits and readiness to relate to chronically depressed GPUs?

Time for some light relief methinks. Here’s a nice fresh hot table.

Oh dear me! It is a most provoking thing when one sets an article up to analyse a piece of hardware with as much potential to influence its demographic as a sequel to caffeine, only to have another sneakily pre-empt it.

Evidently, upon seeing a towering army of ruddy cumulonimbus roll ever closer to his thriving fields, a restless and twitchy Green Giant felt compelled to act, for fear a flawless cultivation may be flooded by a crimson deluge and scoured with scarlet lightning.

At the Titan X’s lavish launch, with an air of jocular cynicism, PCPer’s eminent Ryan Shrout attempted to establish a release date for the 980ti and was informed by one of the jade kingdom’s chief disciples, Tom Petersen, that a loose tongue had incurred the displeasure of his master once too often and no information would or could be divulged.

When promoting the card, he had also stated that it harnessed the Maxwell in its holiest form and doubled the spoils of its Kepler based elder without expending a single supplementary watt.

Once a ferocious flurry of Crysis crippling, Mordor mangling and forum fisticuffs had subsided, actual speed bumps resided between 60 and 80% relative to the original Titan, whilst the GK110B, which primed the latter’s darker and deadlier descendant, reduced this bold assertion to hollow hype.

Nevertheless, to maintain a 250 watt TDP for its fourth crown jewel in succession and deliver such a notable gain to frenzied frame fiends was an admirable accomplishment, especially in the growing heat of AMD’s apparent willingness to forsake the rainforests if it meant a virtual forest could be rendered three seconds faster.

No clearer evidence exists of Nvidia’s assiduous eco awareness than the quantitative discrepancy in components between the Titan X and AMD’s two year old R9 290x and the current necessary to ignite their talents. The GM200 bore 256 extra stream processors, 16 further texture units, 32 more ROPs and almost 2 billion additional transistors but was able to deploy these and all the rest within a thermal envelope 40 watts lower than the Hawaii and elevate performance by 40%.
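For anyone who prefers their eco awareness in numerical form, here is a minimal sketch of what those two figures imply when combined. It assumes the 290x sits at 290 watts (the Titan X's 250 plus the 40 watt gap quoted above) and treats "performance" as a relative index with the 290x fixed at 1.0; both are simplifications for illustration, not measurements.

```python
# Rough performance-per-watt comparison built only from figures in the text.
# Assumptions: 290x TDP = 250 W + 40 W; performance is a relative index
# where the 290x scores 1.0 and the Titan X scores 1.4 (the quoted 40% uplift).

def perf_per_watt(relative_perf: float, tdp_watts: float) -> float:
    """Return relative performance delivered per watt of rated TDP."""
    return relative_perf / tdp_watts

r9_290x = perf_per_watt(1.0, 290.0)
titan_x = perf_per_watt(1.4, 250.0)

# Ratio of the two efficiencies: how much more work per watt the Titan X does.
efficiency_gain = titan_x / r9_290x

print(f"Titan X delivers about {efficiency_gain:.2f}x the work per watt")
```

On those assumptions the efficiency ratio works out to a little over 1.6, which is a larger gap than either the 40 watts or the 40% suggests on its own.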

In the 980 ti, users of either the 780 or 780ti were presented with an enticing upgrade at a broadly agreeable bounty. Even owners of a vanilla 980, whose cravings could never be satisfied if not for that chocolate flake, would have likely nurtured no grudges in cutting their losses on auction sites and re-investing to procure a healthy injection of pace. However, for those who’d already sweet-talked their spouses, forgone vacations, blown tuition fees or sold kidneys to acquire a Maxwell Rich Blend, this cost effective remedy left a sour taste in the gullet.

The inaugural Titan verged on being a concept card, thrust before a crowd of wealthy enthusiasts with intent to seize the singles crown from the HD 7970 and seduce folding smitten content creators with higher quality engineering, vast VRAM reserves, the significant advantage of double precision units and an aura of illustrious exclusivity previously reserved for Nvidia’s elite business class products.

When its trimmed-down, tuned-up siblings materialised to bestow first person fetishists with a financially viable alternative to the R9 290x, Titan adopters could nurse their bitterness with the comforting knowledge that peerless floating point fortitude remained their unique privilege.

The 780ti had played host to an unrestrained Kepler, whose 15 streaming multiprocessors harboured a euphoric chorus of 2880 Cuda cores clocked at a scintillating 920mhz, a greater number and a higher frequency than its founding father but no double precision acceleration and half the memory. As one might guess, this translated to loftier frame rates and inferior mathematical aplomb.

The Titan X, however, represented the definitive realisation of Mammoth Maxwell. No further cores could be accommodated and oscillations had reached their peak. A “Titan X Black” would remain a fantasy unless glowering Greeneyes discovered a way to “magically improve the clocks”; he didn’t. Hence, the 980ti was bound to these maximums and couldn’t transcend the abilities of its elder in any respect.

In keeping with tradition, its RAM was halved from 12 gigs to 6 and most importantly, where the 780ti had included a full complement of 15 SMXs to the Titan’s 14, the 980ti was artificially shorn of a pair, reducing its quota from 24 to 22. With all this considered, was there any logical reason to argue that Nvidia’s approach failed to demonstrate a consistent and canny assessment of its customer-base? If the Titan had managed to amicably co-exist alongside a slighter and more reasonable derivative, why couldn’t the Titan X make peace with its own? There were two reasons and the first concerns timing less sensitive than death metal at a nun’s funeral.

If we discount the ready-salted 780, which the Titan’s numerical and pictorial prowess surpassed, a period of eight months elapsed before the emergence of a cheaper top tier card offering equal or enhanced performance.

By contrast, in turning the 980ti loose less than two months after what was touted as the ultimate answer to a deep learner’s prayers as well as those of every Steamer from Middle Earth to South Vvardenfell, a routinely meticulous fleet commander could be said to have torpedoed his own flagship, despite holding true to his statements and dutifully adhering to prior form.

Additionally, in electing at the outset to eliminate the “Doppler effect” on the GM200 in order to maximise die space and augment single precision agility, Nvidia had arguably discarded a far more marketable commodity to the compute-conscious than either extra memory or additional standard cores.

Perhaps worst of all, the 980ti’s physical deficits had been predicted to convert to a handicap of roughly 8%, though once review samples had been shredded by their clinical and brutal interrogators, this already meagre figure was swiftly revised to 3%, and for a card over 35% less taxing on the wallet.

To put this into novel and amusing perspective, if opting for a Titan X over its skinnier side kick, the buyer would pay approximately 50 cents for every “xtreme” 3DMark gained, 70 dollars per frame gained at 1440p and 115 dollars for each frame gained at ultra HD, a going rate to rival that of the Louvre Museum.
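The going rate above can be run backwards to see how slender the underlying gaps must be. The sketch below assumes launch prices of $999 for the Titan X and $649 for the 980ti; those prices are not stated in this article and are used purely for illustration, although they do agree with the "over 35% less" quoted earlier.

```python
# Work backwards from "dollars per frame gained" to the implied frame gap.
# Assumption: launch prices in USD, which the article itself does not state.
TITAN_X_PRICE = 999.0
GTX_980TI_PRICE = 649.0

def implied_gap(premium: float, dollars_per_unit: float) -> float:
    """Return how many units (frames) the price premium buys at the quoted rate."""
    return premium / dollars_per_unit

premium = TITAN_X_PRICE - GTX_980TI_PRICE   # extra cash for the Titan X
discount = premium / TITAN_X_PRICE          # saving as a fraction of the Titan X price

frames_1440p = implied_gap(premium, 70.0)   # rate quoted for 1440p
frames_uhd = implied_gap(premium, 115.0)    # rate quoted for ultra HD

print(f"Premium: ${premium:.0f} ({discount:.1%} saved by choosing the 980ti)")
print(f"Implied lead: {frames_1440p:.1f} fps at 1440p, {frames_uhd:.1f} fps at ultra HD")
```

Under those assumed prices the premium buys a lead of about five frames per second at 1440p and roughly three at ultra HD.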

But what of the future? In a world where eye candy was sweeter than saccharine and more enchanting than Violets dancing to Verdi, wouldn’t a 12 gig buffer become imperative once anti aliasing made edges look smoother than a Harley Street patient and 4k was defined as yesterday’s resolution? Unlikely. As things stood on the recreational front, 4 gigs was ideal, 6 gigs was a luxury and once games were coded to exploit 12 gigs, this X rated Titan’s Achilles would not be its memory but rather King Maxwell himself!

Cue the Forums

Nvidia-Ite – Five unanswered uppercuts from Nvidia since the amp guzzling atrocity that was the R9 295×2, and every single one quieter, prettier, and over-clock-able without tearing a hole in the stratosphere. Does this really need to go to points or should we retire AMD and declare the 28nm war fought and won? It’s immoral to allow such pulverizing punishment to continue when a company is so manifestly incapacitated; the long term effects could be devastating. Board room dissension, fan-boy desertion and certainly no more lucrative contracts from Apple, unless Tim Cook discovers a constructive way to market melting iMacs.

AMD-Ite – Uppercuts? What fight are you watching? More like five wild sucker punches from a frustrated green eyed monster who’s been unable to land a hurtful blow since the opening bell and is now becoming desperate. Whatever happened to his cagey opportunism? It might not have grabbed the judges’ attention but his tight defence in rounds three through five at least showed some semblance of a strategy.

To wait for Red-beard to throw his hay-making Hawaiian hook before responding with that clubbing Kepler counter was a tactical master-stroke. Now all has succumbed to recklessness. He thought the Maxwell combo, that sharp left cross (GTX 980) and bone cruncher to the body (Titan X) would seal the deal, but AMD took it and grinned through its big red gloves. He should have held off, skated away, tried to steal it on points but instead, he rushed forward like a flailing drunk. A hundred shots thrown and not a single one landed. Plan A has gone to pieces, plan B doesn’t exist. Can’t you sense he’s panicking?

Nvidia-Ite – An inventive twist on my pugilistic analogy that carries as much weight as a Crane Fly’s jab. Since the Maxwell, your man has barely risen from his stool. You know why? Because he never believed this would go the distance and punched himself out by the 3rd. Hardly surprising since conserving energy has never been his forte. A total of 1990 watts expended in the battle to be crowned this node’s champion compared to Nvidia’s 2535. Sounds encouraging until you consider he’s extracted five fewer cards from it. That’s an average of almost 100 watts less per GPU and you assert we’re not pacing ourselves? All AMD has been able to do since the 980 is slash prices to compensate for slowness. Mark my words, if this onslaught continues, the maroon mauler might never render again.

AMD-Ite – Ever heard of rope-a-dope? What you assume to be submissive silence is actually a product launch more clinically coordinated than a presidential campaign. The 24th of June will be the 8th round in Zaire and the Fiji, Ali’s surgical finisher to Foreman’s Titanic jaw.

Oh, and the last time I checked, the R9 295×2 was still king of the mountain, despite five free title shots in the form of the Titan Black, the Titan-Z, the 980, the Titan X and this latest farce. Harp on about three broken crossfire profiles within a plethora of over 20000 games. Spread toxic propaganda about the miseries of micro-stutter even when the most forensic analyses have confirmed they are all but expunged. It matters not. Once those tortuous tests have looped themselves into limbo and visions of wavy foliage and fluffy doughnuts preclude peaceful slumber, Vesuvius still roars louder than any Titan.

Nvidia-Ite – Provided you’ve obtained the requisite planning permission to install it, and by roar, I assume you mean its fan. Come on, the Titan X instantly relegated all dual boards to relics and good riddance, who wants one now? They’re cumbersome, impractical, uneconomical and a source of dire aggravation for driver developers.

AMD-Ite – The Titan-Z certainly was, more than 15% slower than the 295 for a sum that would buy you a year’s vacation on the Moon.

Nvidia-Ite – But far more overclockable, entirely air cooled, and 125 watts left for neighbours.

AMD-Ite – If you’re really the type to dither over every amp, you’d have ditched your desktop long ago to live in a house with app controlled solar panels, no kettle or toaster, a cardboard microwave, a vegan oven, a fridge that lectures you on global warming whenever you defrost it and an iPad at every power point telling you how many hours you need to huff and puff on the cross trainer to boil half an egg.

Nvidia-Ite – No one’s pretending dream gaming rigs are monuments to mother nature but when you’re burning more fossil fuels during a Black Ops killing spree than a Dodge Viper down Route 66 you need to wake up and smell the ozone.

AMD-Ite – We’re all at it. What are you, an Al Gore tribute act? Allow me to hoist you on your own petard. Tell me which is worse, Nvidia pumping out 10 video cards with a collective TDP of 2.5 kilowatts or AMD releasing five with one of below 2? Is it better to make twelve Smart Cars or six Subarus, get the gist? The material and production costs alone obliterate any conservation gained by the lower average.
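For the record, the sums the two combatants are trading can be sketched as follows. It takes the totals thrown around earlier in this exchange (2535 watts for Nvidia, 1990 for AMD) and the card counts quoted here (ten and five). Note that it averages per card rather than per GPU, so it need not match any per-GPU figure, since the text gives no breakdown of how many chips sit on each board.

```python
# Average rated TDP per card, using only totals and counts quoted in the exchange.
# Caveat: this is a per-card average; dual-GPU boards are counted as one card.

def average_tdp(total_watts: float, cards: int) -> float:
    """Return the mean TDP per card for a line-up."""
    return total_watts / cards

nvidia_avg = average_tdp(2535.0, 10)
amd_avg = average_tdp(1990.0, 5)

# Positive gap means the average AMD card is rated hungrier.
gap = amd_avg - nvidia_avg

print(f"Nvidia: {nvidia_avg:.1f} W per card, AMD: {amd_avg:.1f} W per card")
print(f"Per-card gap: {gap:.1f} W")
```

Whether a lower average or a lower total matters more is, of course, exactly what the pair are arguing about.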

Nvidia-Ite – Not when you account for the net consumption of all those Radeons roasting inside customers’ cases.

AMD-Ite. Which skews things even further in our favour. Who do you think sells more cards?

Nvidia-Ite. Precisely. Perhaps your margins would improve if your philosophy wasn’t so profligate. The Fiji is going to be water cooled, do you get that? Fluid dependent. A single chip card that demands liquid relief straight out of the box. A first of its kind, in the worst possible context. It’s as good as cheating. If Nvidia had played by those rules, by now we’d be marvelling at Middle Earth in 8k at 100fps with a Maxwell cool enough to freeze mercury. Have you seen the figures being bandied around? We’re talking a TDP of 375 watts for ONE GPU, the same as the Titan-Z needed for two and 45 more than for a pair of GTX 980s.

AMD-Ite. Poisonous fallacies worthy of the tackiest tabloids. Had you endeavoured to cleanse your tainted conscience with tincture of truth you’d have discovered there is an air-cooled counterpart.

Nvidia-Ite. Really? Does it double up as a drone to save on shipping costs?

AMD-Ite. If it guzzled as much as the Titan-Z it could fly your kids to Disneyland, but to negate your second fictitious claim, its TDP is actually 275 watts.

Nvidia-Ite – Still hungrier than the Maxwell.

AMD-ite – And worth it. The technology is unprecedented, high bandwidth memory, AMD’s fattest die yet, over a thousand more stream processors than the Hawaii. Close to 9 billion transistors. To have achieved all that, saved 15 watts and raised tempos by almost fifty percent is one hundred percent astounding.

Nvidia-Ite – Oh? Now who’s making rash predictions? Where did you get that figure?

AMD-ite – From the same unsullied source as the last one.

Nvidia-Ite. They could have shaved off another watt if they’d skipped on those naff LEDs.

AMD-ite. Do I detect envy or is your face always that colour?

Nvidia-Ite. It’s just a bout of nausea, brought on by your attempts at humour and a graphics card that resembles a breeze block on a ventilator.

AMD-ite. First, I suggest you casually browse the meanderings of this wayward and whimsical website until an exceptionally fact saturated table slides into view. Wait. What’s that? I believe I sense one approaching.

AMD-ite. Second, I assume you didn’t time-shift E3. Just as well really, Jaws scarred swimmers for life, you’d have never gone near another monitor if you’d seen AMD’s presentation. Lisa Su’s poise and charisma are matched only by her passion for peripheral perfection and palpable devotion to the ingenious team she leads.

Nvidia-Ite. I’d rather have a drink with Tom Petersen.

AMD-Ite – Even if he refused to pay and your pint arrived partially unlocked?

Nvidia-Ite. I’m a teetotaler.

AMD-ite. Green Tea by any chance?

Nvidia-Ite. Is there such a thing?

AMD-ite. You should get out more.

Nvidia-Ite. You should learn what sarcasm is.

AMD-ite. Forgive me, my wit doesn’t plumb such depths. Anyway, the Fiji’s imperial vessel is called the Fury X, one and a half times more prolific per watt than the 290x and your efforts to tarnish its sublimity only invite ridicule. It is as stunningly sculpted as the Elgin Marbles, as cute to behold as puppies cuddling piglets and oozes more quality than a Chocolatier’s legacy.

Nvidia-Ite. Especially when it melts.

AMD-ite. Honestly, lay off the thermal jibes, get some new material and check back into reality. Thanks to Cooler Master’s ingenuity this beautiful creature has a typical load temp of 50 degrees, that’s a 30 degree undercut on your infernal contraptions.

Nvidia-Ite. So what? For an amphibious card I should damn well hope so. Take a look at EVGA’s Hybrid 980ti. A refashioned air cooler fused with a pre-assembled closed loop, stock clocks over 150mhz higher than the reference version and what a surprise, an identical load temperature.

AMD-ite. You mean, a grotesque retro fitted appendage that further defiles a dated design. Uttering it in the same breath as the Fury brings breakfast to my throat. How can that ghastly mutant compare with a stylish chassis of die cast aluminium, smoothly coated in silken midnight nickel with sensuously tactile side panels? Illuminated logos on the underside and outer edge. An elegantly crafted bracket engraved with the Radeon emblem. Its stainless, vent-less surface fostering a trio of display-ports topped off by a second gen HDMI input. All neatly packed onto a card scarcely larger than the radiator that disperses its clement warmth.

Nvidia-ite. Clement? Assuming your address is 101 Hades Avenue.

AMD-ite. Better Hades Avenue than Price Gouge City. No more fouled USB headers, no more blocked m.2 slots, SATA sockets or mobo switches. Acoustics quieter than autumn leaves and armfuls of room for airflow. Think of the potential. Monstrous rigs with greater compute capacity than last year’s server farms, yet as silent as a mouse’s shadow in a monastery. ITX builds with ATX brunt.

Motivation for app weary sofa surfers to make a quantum leap into role playing paradise.

How many PlayStation phobics who covet a device of equal proportions will endeavour to forge a “Box” of their “Sweetest” and “Steamiest” fantasies around seven sensational inches of scintillating graphical bravura? And for fools who still doubt that small can be deadly, regard the R9 Nano and drool.

Swifter than a 290, half the length and twice as frugal on fuel. An irresistible opportunity for inspired OEMs to breed armies of savage super NUCs to liberate bedrooms and lounges hitherto subjected to years of tortuous tyranny beneath console communism’s steely sky. These cards aren’t merely cards, they’re our saviours. They’ll immortalize PCs, bring an end to insufferable ports ruined by idle programmers, preserve the honourable art of bespoke builds and prevent the hardware hoarder’s tragic descent into redundancy.

Nvidia-Ite. A compelling and nauseating eulogy that almost had me convinced, save for one colossal spanner in the pipelines.

AMD-ite. Your lack of imagination?

Nvidia-Ite. No, your lack of observation. AMD evidently doesn’t share your radicalism. No HDMI 2 I’m afraid, only 1.4. I hardly need point out that means a nice fat cap of 30Hz at 4k on televisions that don’t have display ports which, last time I checked, was approximately all of them! Isn’t that wonderful? A graphics card twice as pricey as a PS4 with over three times the pixel prowess, but deprived of the ability to showcase it in the same environment. Bang goes your PC revolution.
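The 30Hz ceiling is not mere forum folklore; it falls out of the link's clock budget. The sketch below is a simplified check that assumes the standard CTA-861 timing for 3840×2160 (4400×2250 total pixels including blanking), 8 bits per colour, and TMDS clock limits of 340MHz for HDMI 1.4 and 600MHz for HDMI 2.0. It ignores chroma subsampling and other workarounds.

```python
# Does a 4K signal at a given refresh rate fit within an HDMI link's clock limit?
# Assumptions: CTA-861 4K timing (4400 x 2250 total, blanking included),
# 8 bits per colour so the TMDS clock equals the pixel clock.
HDMI_1_4_MAX_TMDS_MHZ = 340.0
HDMI_2_0_MAX_TMDS_MHZ = 600.0
H_TOTAL, V_TOTAL = 4400, 2250

def pixel_clock_mhz(refresh_hz: int) -> float:
    """Return the pixel clock in MHz needed for 4K at the given refresh rate."""
    return H_TOTAL * V_TOTAL * refresh_hz / 1e6

def fits(refresh_hz: int, max_tmds_mhz: float) -> bool:
    """Return True when the required pixel clock is within the link's limit."""
    return pixel_clock_mhz(refresh_hz) <= max_tmds_mhz

for hz in (30, 60):
    print(f"4K at {hz}Hz needs {pixel_clock_mhz(hz):.0f}MHz: "
          f"HDMI 1.4 {'ok' if fits(hz, HDMI_1_4_MAX_TMDS_MHZ) else 'no'}, "
          f"HDMI 2.0 {'ok' if fits(hz, HDMI_2_0_MAX_TMDS_MHZ) else 'no'}")
```

Under these assumptions 4K at 30Hz squeezes under the HDMI 1.4 limit while 60Hz overshoots it comfortably, which is the cap being lamented.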

AMD-ite – Well, with DisplayPort 1.2a and FreeSync, perhaps HDMI’s days are numbered. It can’t be long before we start seeing an influx of DP TVs.

Nvidia-Ite. Ha! So we just invest on the off chance? Which one of us should count the minutes?

AMD-ite – There’ll be adapters this summer.

Nvidia-Ite – At $80 each and manufactured by Startech no doubt. A company that compensates for the clumsy oversights of others and a life saving remedy for abandoned consumers. All those poor little Nanos foundering at sea, cast adrift by their callous crimson mother ship. “Help us.” They howl in desperation as salty waves swell around their capacitors. “We were promised sanctuary in cosy living rooms not a dark and icy death. Those consoles were supposed to be the outcasts, not us. Heaven help us, please, save us. Hark! For I hear a distant hum. Look! Do you see that, on the horizon, can it be? Are we to be saved? Yes! Hooray! The Super Startech lifeboat has rescued our rasterisers.”

AMD-ite – If only they made an adaptor to convert your flaky fables into common sense.

Nvidia-Ite – Or to adapt your arguments with intelligence and subtlety.

AMD-ite – Maybe AMD will bundle them.

Nvidia-Ite – Maybe pigs will fly. Maybe a 4 gig frame buffer really is enough.

AMD-ite – Either there are no bounds to your stupidity or scaremongering just became an Olympic sport.

Nvidia-Ite – Not at all. I was merely pointing out that while piling fancy RAM high may sell fast at ultra HD, 4 gigs was last year’s minimum. If you’re a sucker for slideshows, you’re in for a treat.

AMD-ite – The memory wall is a limitation that AMD were mindful of from the get go and elected to suffer in their quest for speed. Had they opted for GDDR5, we’d have all been bored to death by you squawking over every milliamp per byte the Fiji expended.

The principal objectives of HBM are peerless bandwidth, a reduced form factor and minimal power consumption, and all three are accomplished by moving the chips closer to the GPU and assembling them in stacks. For this initial implementation, each die has a capacity of 2 gigabits, each stack is constrained to a height of four dies and a total of four stacks can be arranged around the GPU. That makes 8 gigabits per stack and, at eight gigabits to the gigabyte, one gigabyte per stack, hence an overall tally of 4 gigabytes.
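For those who would rather see the stacking sum spelled out, here is a minimal sketch using only the figures given above (2 gigabits per die, four dies per stack, four stacks). The constant and function names are invented for illustration.

```python
# First-generation HBM capacity, built up from the per-die figure in the text.
GIGABITS_PER_DIE = 2
DIES_PER_STACK = 4
STACKS_PER_GPU = 4
BITS_PER_BYTE = 8

def stack_capacity_gigabytes(gigabits_per_die: int, dies: int) -> float:
    """Return one stack's capacity in gigabytes (gigabits divided by eight)."""
    return gigabits_per_die * dies / BITS_PER_BYTE

per_stack_gb = stack_capacity_gigabytes(GIGABITS_PER_DIE, DIES_PER_STACK)
total_gb = per_stack_gb * STACKS_PER_GPU

print(f"{per_stack_gb:.0f} GB per stack, {total_gb:.0f} GB in total")
```

Changing any one of the three constants shows why the ceiling is structural: with die density, stack height and stack count all fixed, there is nowhere for a fifth gigabyte to go.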

Nvidia-Ite – Fascinating. I had assumed they just thought stacks looked prettier and my utmost gratitude for teaching me to add up. My point however remains. Shadow of Mordor, Crysis 3, Dying Light, Skyrim stuffed with texture packs. With optical opulence at its sweetest, all of these games and others are apt to gobble up 4 gigs faster than a blue whale engulfs 4 tonnes of krill, even at 1080p. What happens at 4k and with multi screen configs? Sorry, but a Titan X is the only way to ensure a secure, stutter free future.

AMD-ite – Please! The Hawaii’s signature was Ultra HD. A 290x traded frames with a Titan despite a 2 gig deficit and regardless of eye candy. Think of it this way, in business, throwing money in ever increasing quantities at a problem rarely alleviates it; success is ultimately governed by distribution. Likewise, with many technical obstacles, the solution lies not in the numbers, but how effectively they’re deployed. When you consider that every single megabyte on the Fury X is effectively served by a stream processor and a one bit bus, you’ll begin to comprehend the scale of AMD’s feat and appreciate how it will debunk the illusion that greater equals better.
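The one-to-one boast can be checked with a few divisions. The sketch below assumes 4096 stream processors and a 4096-bit memory bus for the full Fiji card; neither number is spelled out in this exchange, so treat both as assumptions, alongside the 4 gigabytes of memory already discussed.

```python
# Ratio of stream processors and bus width to memory capacity.
# Assumptions (not stated in the dialogue): 4096 stream processors, 4096-bit bus.
MEMORY_MEGABYTES = 4 * 1024     # 4 gigabytes expressed in megabytes
STREAM_PROCESSORS = 4096
BUS_WIDTH_BITS = 4096

def per_megabyte(resource_count: int, megabytes: int) -> float:
    """Return how much of a resource is available per megabyte of memory."""
    return resource_count / megabytes

processors_per_mb = per_megabyte(STREAM_PROCESSORS, MEMORY_MEGABYTES)
bus_bits_per_mb = per_megabyte(BUS_WIDTH_BITS, MEMORY_MEGABYTES)

print(f"{processors_per_mb:.0f} stream processor and "
      f"{bus_bits_per_mb:.0f} bus bit per megabyte")
```

If those assumed figures hold, the symmetry is exact, though a tidy ratio is a numerical coincidence of the design rather than a measure of performance.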

Nvidia-Ite – Think you’ll find the Maxwell massacred that myth a while ago. Slimmer bus than the Kepler, fewer transistors, smaller die, fewer CUDA cores, trimmed texture units, a lower TDP and yet, 10% nippier.

AMD-ite – Correct, but you forgot the most important deficit: 4 gigs to the Titan Black’s 6 never troubled the 980, did it? Yet here you are declaring it won’t suffice for the Fiji. Perhaps it’s time I withdrew and let you wrap up this debate alone. Who knows, you may surprise yourself and win.

Nvidia-Ite – Months have passed since then, in the Steam community that’s an ice age. If four gigs can still cut it, why slap 12 on the Titan X?

AMD-ite – To nourish a profitable fairy tale at the Fiji’s expense. In light of dumping double precision it was their only option.

Nvidia-Ite – Are you serious? Take another look at that table. If your beloved ruler is so confident of his ground breaking design, then why the need for yet another shambolic re-brand? The 390 and 390x both have Hawaii GPUs, so what the hell is a Granada? Is he pretending these chips are brand new? You know people are convinced they’re Tonga re-spins with GCN 1.2 architecture? At least with the 280 he had the decency to keep the Tahiti name. More significantly, why double up on the RAM, if four gigs is genuinely enough?

AMD-ite – Don’t know how anyone could conclude the 390s were gen three GCN. The 380 has been public knowledge for weeks. That’s the Tonga paraphrase, it follows the 285 and m295X.

Nvidia-Ite – Ah yes, the m295X. A mobile top dog with such promise and so warmly welcomed by entranced Appalachians…until it scorched a granny smith sized hole in their golden delicious displays.

AMD-ite – You really are a Cox. As for the heftier Hawaiis, it’s devilishly astute marketing when you acknowledge their leaner prices. I love it. Re-branding without an attractive bonus would be illogical. As things stand now, how many of your seething comrades who willingly parted with over $400 to acquire their shiny new 4, sorry, 3.5 gigabyte GTX 970s will be tempted to snatch an octogig 390 and flee the verdant valley once and for all?

Nvidia-Ite – It’s last minute cover in case HBM doesn’t pay off. They’ve jumped ship too soon. They should have sat out the first cycle and probably the second.

AMD-ite – Quit grasping at straws, there’s not the slightest connection. Search all you want, you’ll find no evidence of any repute that demonstrates 4 gigs doesn’t cut the candy. The R9s and 980s more than stand firm with optimum profiles applied in every noteworthy title. Even a 780 is enough 90% of the time. Choppy action at hyper resolutions is down to the GPU itself showing wrinkles, a game’s heavy reliance on the CPU, inadequate system memory, buggy drivers, or plain poor coding. VRAM is an influential resource, though not nearly as pivotal as Nvidia has willed you to believe.

Nvidia-Ite – I suggest you research more thoroughly. A growing contingent venturing beyond 1080p are being forced to sacrifice any form of anti-aliasing and use customised pre-sets with lighting effects, draw distance, shadow detail and similar retinal spoils relegated to “medium”. Dying Light, Dragon Age Inquisition and Crysis 3 grind every 4 gig card into the dust at 4k. If rumours are accurate the Fiji’s fiery wrath will be ridden and weathered by a 980ti, never mind a Titan X.

AMD-ite – Since when did 4k become gaming’s raison d’être? Just because Nvidia’s been selling it harder than home insurance doesn’t mean its future is certain.

Nvidia-Ite – A rather different tune to the one you were singing a moment ago when you deemed it the Hawaii’s party piece.

AMD-ite – And so it shall be the Fiji’s, but AMD never hyped 290s as mega High Def maestros. This was subsequently established by reviewers, most of whom used crossfire rigs because no single card at that time could provide a playable 4k experience. The Titan X was explicitly billed as being able to attain “acceptable” frame rates at 2160p, which in Nvidia doubletalk translated to 40fps, meaning frequent sags below 20. How many seasoned veterans would be content with that?

Nvidia-ite – Seems reasonable to me.

AMD-ite – Indeed, and to all others plodding along with their monitors still capped at 60Hz, yet to reap the virtues of voluptuous variability. It’s ironic that you’ve overlooked another major attraction your God recently attempted to hijack. Did it ever occur to you that AMD might be targeting the Freesync fraternity? What’s wrong with 1080p and 1440p now that we can break the 60 barrier and still bask in buttery smoothness with not a tear shed or tear in sight?

Nvidia-Ite – Oh really? 1080p might excite those console commoners, but us? Role playing royalty, kings of eye-candy, we who revel at the spectacle of our colleagues’ thunderstruck countenance and crestfallen slump as they lament the prospect of having to shuffle home and suffer Skyrim on their XBOX in plain old HD?

AMD-ite – Something tells me they’d be equally perturbed by the spectacular sluggishness of 30 fps in place of 130.

Nvidia-Ite – Those resolutions are CPU staggered, pick any video card from the last 5 years and chances are it would top the magic 100 with a stalled fan.

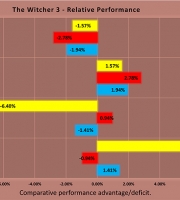

AMD-ite – Yet more astounding ignorance. Metro Last Light, Thief, Ryse: Son of Rome, Assassin’s Creed Unity and The Witcher 3 all have a Titan X sweating to sustain an 80 average. A GPU can be persuaded to push plenty of pixels at the low end of the spectrum. Quiver in mortal terror at what the Fury could do by employing that bountiful bus to stimulate every last sliver of its frame buffer. We may witness 150fps across the board.

Nvidia-Ite – If that’s really what folks are after, why not simply grab a pair of 980s and clock them to cuckoo-land? Almost as easy on the environment, quicker and not much pricier.

AMD-Ite – One Fury X will be all you need to brutalize Battlefield.

Nvidia-Ite – And two will be all you need to start another cold war.

AMD-Ite – Keep up those clumsy witticisms, and in two weeks you’ll receive the biggest punchline of your life. Where in the name of Unigine Heaven are you sourcing your 980s? $500 apiece is the current base rate. $1000 for two or $659 for a Fury X. I’d call that a pretty substantial saving.

Nvidia-Ite – You’d get 30% more pace.

AMD-ite – In that case cough up another $320, marry two Furies and you’ll climb another 30%.
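The price sparring above is easy to verify. Here is a rough sketch using only the dollar figures the two combatants quote; the names are invented for the example and the performance claims are left well alone.

```python
# Prices as quoted in the dialogue (US dollars).
GTX_980_PRICE = 500
FURY_X_PRICE = 659


def setup_cost(unit_price, cards):
    """Cost of a rig with the given number of identical cards (hypothetical helper)."""
    return unit_price * cards


pair_of_980s = setup_cost(GTX_980_PRICE, 2)   # 1000
single_fury = setup_cost(FURY_X_PRICE, 1)     # 659
pair_of_furies = setup_cost(FURY_X_PRICE, 2)  # 1318

print(pair_of_980s - single_fury)     # 341, the saving from one Fury X
print(pair_of_furies - pair_of_980s)  # 318, the "another $320" rounded up
```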

Nvidia-Ite – As soon as AMD gets round to releasing crossfire patches for games that SLI users completed six months earlier. Have you seen the leaked Firestrike benchmark? The Fury barely shades a stock 980ti. The game’s over.

AMD-Ite – Aren’t those the same results that show the Fury besting the Titan X in the Ultra test? Should I still be worried about it choking at 4K? Besides, basing your opinions on pre-launch exhibitions and benchmarks run with nascent beta drivers is like predicting the outcome of an F1 race after the first practice session. Cheap and childish gamesmanship.

Nvidia-Ite – Well? What about those seeking double or triple delights? Take your pick. Three volcanic voltage vivisectionists tethered to awkward and ponderous cooling, crammed into a towering inferno and itching to erupt at 100 degrees within three seconds of FurMark or, a trio of whirring whispering artisans, overclocked to the bleeding edge of their trembling transistors and yet, as cool in the heat of Battlefield as a camel beneath the sun’s anvil.

AMD-Ite. Your argument is as robust as a runt frame. That cooler is rated to dissipate 500 watts despite the Fury pulling nigh on half that on the ragged edge. If you believe for one fleeting polygon that this thing won’t over-clock like ten thousand Rolex watches on a Swiss train in a time warp you’re delusional. AMD may as well have inscribed it with the words “crank me up or I will cry”.

Nvidia-Ite. If that really were the case perhaps you’d like to explain why they’ve hobbled the air cooled version. Lower core speed, fewer compute and texture units and 500 fewer stream processors. Looks like they struggled so much with yields that they only felt confident to stick the full fat chip under water. Frankly that’s a cop out. They literally bottled it.

AMD-Ite. What’s the matter, been rattled by some of the early projections? Those 4k scores for Tomb Raider and Far Cry got you running scared? Allow me to chase you down and compound your suffering. Here’s a link to a spellbinding showcase of every captivating feature and indispensable innovation the Fury will offer its visionary adopters along with a compendium of results from benchmarks conducted on two reference systems, with all relevant settings and specifications fully disclosed. Thirteen legitimate tests executed under typical conditions with approved drivers. No suspicious leaks from unverified sources, no sly selectivity or misleading interpretation, all data officially recorded by AMD and impartially published in unabridged form. Do my eyes deceive me, or is that a Fury whitewash?

Nvidia-Ite. The most skewed set of statistics since NATO last submitted a tax return. The title alone was enough to convince me I’d be reading something as impartial as Che Guevara’s manifesto. Reviewer’s Guide??? Do we live in a dictatorship?

“Dear Mr. Mega Technophile of Transistor Times Weekly, we’d be most honoured if you’d review our product and will happily furnish you with a sample. Here is a list of popular applications we suggest you try and the hardware configurations we used to obtain the enclosed results. We believe these parameters to be the optimum foundation for demonstrating what the world’s greatest graphics card can deliver and advise that you are diligent in replicating them.

Should your figures deviate from ours, please double check every setting and component. If they still fail to correspond, either re-publish our results or the next parcel we send you will be ticking.” Now we know what happened to poor old Kitguru.

AMD-Ite. You’re more shaken than a maraca in a tumble dryer.

Nvidia-Ite. I’m not in the least concerned. AMD has always played to a gallery of stat junkies who harvest their results from five minute intervals of benchmarks and time demos. Remember the 290X? Glowing reports from glory hunting hacks praising how it trumped the Titan for half the cash, only for it to later emerge that several crucial sources had relied on hand-picked samples that maintained higher frequencies than retail parts. Note the guileful spiel on those slides. “Typical board power.” “Up to 1050MHz.” Doesn’t any of that ring a hell’s bell? Why power it via dual 8 pin PCI-E sockets if it only sucks 300 watts?

AMD-Ite. Nothing devious there. Just another tantalizing goad, a way of saying to tweakers: “If you don’t push the Fury to its incandescent peak, you’re a craven coward who’d be better off going green, so to speak.” It’s rated to absorb 375 watts, that’s headroom of 100 watts to play with, somewhat more generous than the miserly 25 a Maxwell gives you before its draconian limiters deny an overclocker’s freedom.
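The 375 watt figure squares with the PCI Express power limits: 75 watts from the slot plus 150 from each 8 pin connector. A minimal sketch of that budget follows, assuming a typical board power of 275 watts, which is implied by the 100 watt headroom claim rather than stated outright. The helper names are invented for the example.

```python
# PCI Express power delivery limits, in watts.
PCIE_SLOT_WATTS = 75
CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}


def board_power_limit(connectors):
    """Maximum in-spec draw for a card with the given auxiliary connectors."""
    return PCIE_SLOT_WATTS + sum(CONNECTOR_WATTS[c] for c in connectors)


def headroom(connectors, typical_board_power):
    """Watts left over between typical draw and the in-spec ceiling."""
    return board_power_limit(connectors) - typical_board_power


dual_eight_pin = ["8-pin", "8-pin"]
print(board_power_limit(dual_eight_pin))  # 375
# 275 W typical board power is an assumption inferred from the dialogue.
print(headroom(dual_eight_pin, 275))      # 100
```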

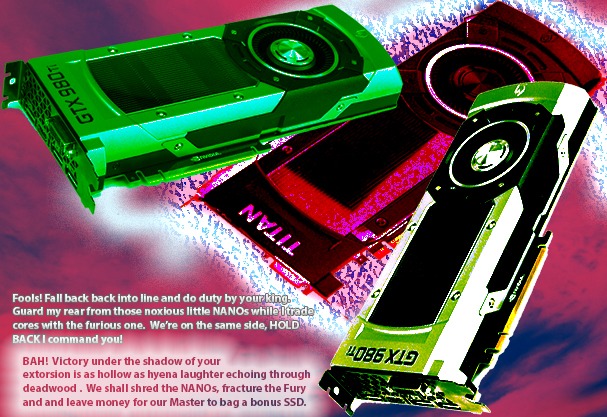

Nvidia-Ite. For your sake I’m relieved. It’ll need every one of them if it wants to compete with beasts like this.

10% up on a Titan X out of the traps with 150MHz left in the tank, and that’s on AIR.

AMD-Ite. HA! So the fact that Nvidia were lazy, arrogant and presumptuous enough to recycle the same cooler for the fifth time in succession means you now have to rely on after-market boards to salvage a scrap of respect? I wish I’d sold tickets for this! What about the other bonuses you’ve glossed so smoothly over? The real-time VU with DIP definable LEDs.

Nvidia-Ite. I have a prettier one on my mixing desk.

AMD-Ite. Asynchronous compute engines, an exclusive feature of GCN’s architecture guaranteeing the most efficient and reliable Direct X 12 experience anybody could envisage.

Nvidia-Ite. This sounds hauntingly familiar.

AMD-Ite. They can harness the API’s unique facility to execute sophisticated graphical routines and shading operations in parallel, minimizing latency, while the host system’s workload will be more evenly distributed across multi-core CPUs, removing the bottleneck DX11 precipitated when one core became overly utilized. Coders and developers can now evolve their expertise and enjoy an unfettered line of communication with the machinery, allocating the hardware’s resources more practically and with immeasurably greater intuition.

Nvidia-Ite. More political guff. The industry has been harping on about CPU optimization for over half a decade and still, the most strenuous games barely bother a quad core chip. You’d think from that sickening self-publicising that AMD had invented ASYNC Shaders. The Maxwell can process simultaneous tasks just as effectively and supports every DX 12.1 function. Next please.

AMD-Ite. VSR, Virtual super resolution to enhance visual detail on non-Ultra HD monitors. Images are rendered at 2160p then scaled down in accordance with the display’s innate dimensions resulting in a quasi anti-aliasing effect indistinguishable from the real thing and rivalling the quality of native 4k content at negligible expense to performance.
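The render-high-then-shrink idea described above can be illustrated with a toy example. This sketch averages each block of pixels in a greyscale image, which is a plain box filter chosen for simplicity; it is an assumption for illustration and not a description of the filter AMD's driver actually uses.

```python
def downscale(image, factor):
    """Average each factor x factor block of a 2D greyscale image (list of rows)."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be multiples of factor")
    scaled = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            block = [image[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        scaled.append(row)
    return scaled


# A hard diagonal edge "rendered" at twice the display resolution.
hi_res = [
    [0,   0,   0,   255],
    [0,   0,   255, 255],
    [0,   255, 255, 255],
    [255, 255, 255, 255],
]
print(downscale(hi_res, 2))  # [[0.0, 191.25], [191.25, 255.0]]
```

The jagged black-to-white step becomes an intermediate grey along the edge, which is the quasi anti-aliasing effect in miniature.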

Nvidia-Ite. So essentially identical to Dynamic super resolution. Nice work on the re-brand, a whole word’s worth of difference. Next.

AMD-Ite. Target Frame Rate Control for the user to nominate a specific frame rate which the card will then dynamically enforce by attenuating the large spikes on load screens and menus or during less intensive activity, hence eliminating needless power consumption. Supremely flexible overclocking providing a means to achieve the definitive balance between thermals, acoustics and efficiency via adjusting the target GPU temperature, fan speed, desired power usage and core frequency up to 50 and 90% over their stock values with the finest granularity and absolutely no TDP limit in sight.
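The principle behind a frame rate cap is simple enough to sketch: if a frame finishes ahead of its time budget, the card can sit idle for the remainder instead of racing on to the next one. The functions below are hypothetical and model only that arithmetic, not how AMD's driver implements the feature.

```python
def frame_budget(target_fps):
    """Seconds available per frame at the chosen target rate."""
    return 1.0 / target_fps


def idle_seconds(render_seconds, target_fps):
    """Time the GPU can rest after a frame; zero if the frame ran over budget."""
    return max(0.0, frame_budget(target_fps) - render_seconds)


def effective_fps(render_seconds, target_fps):
    """Frame rate actually delivered once the cap is applied."""
    return min(target_fps, 1.0 / render_seconds)


# A menu screen that renders in 1 ms would otherwise spin at 1000 fps.
print(effective_fps(0.001, 60))  # 60
# A heavy scene taking 50 ms per frame gets no rest at all.
print(idle_seconds(0.05, 60))    # 0.0
```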

Nvidia-Ite. Oh, spare me any more of this puerile promotional piffle and let’s see some genuine numbers. My friend, we both know where this is going. Journalistic jubilation followed by gradual and grim realisation that when submitted to impartial scrutiny under the merciless conditions that only a certified endurance gamer can induce, the Fury will flounder and fade. Let’s see exactly how willing it is to over-clock after an hour of Dying Light, let’s see how much VRAM is left half way through an evening of GTA 5 and how many watts it gorges in an Elder Scrolls weekend. The core will crumble, the frame buffer will fold while the Titan and the Ti tear through textural martyrdom without dropping a megahertz.

AMD-Ite. Right. I think that’s the last toy out of the pram. You have one day left to dream.

___________________________________

The Fiji’s genetics were nothing if not radical, though our tenacious turquoise apologist was partially correct. The fact Redbead had seen fit to imbue the supreme incarnation of his masterpiece with aquatic instincts at birth meant he had all but conceded the struggle for carbon credits and would rely on raw pace to retain his ruby legions, recruit free agents, including five people who refused to part with their Voodoo 5 6000, and force betrayed acolytes wandering the perimeters of lush grassy meadows to submit to the wicked wonders of a scarlet wilderness.

Furthermore, his decision to elevate memory to unprecedented heights at a considerable cost to its volume was as reckless as a gambler tossing his last quarter on 44 red in raging defiance of luckless misery. If his timing was perfect, 4 gigs would ferry his fervent followers through the next textural tempest, but if it wasn’t, and that little white marble rattled into the jaws of a gaping green zero, a triumphant emerald casino would collect. I wonder how he got on?